In this second article in our series on Integrated Deployment, where we focus on solving the challenges of productionizing data science, we look at the Model part of the data science life cycle.

In the previous article we covered a simple Integrated Deployment use case. We first looked at an existing pair of workflows: one that created a model and a second that used that model in production. Then we looked at how to build a training workflow that automatically creates a workflow that can be used in production immediately. To do this, we used the new KNIME Integrated Deployment Extension. That first scenario was quite simple. In a real situation, however, things can quickly get more complicated.

For example, what would the workflow look like with Integrated Deployment if we were training more than one model? How would we flexibly deploy the best one? And if we had to test the training and production workflows on a subset of the data, how would we then retrain and redeploy on a bigger dataset, picking only the relevant pieces of the initial workflow? Let's see how Integrated Deployment can help us accomplish these tasks.

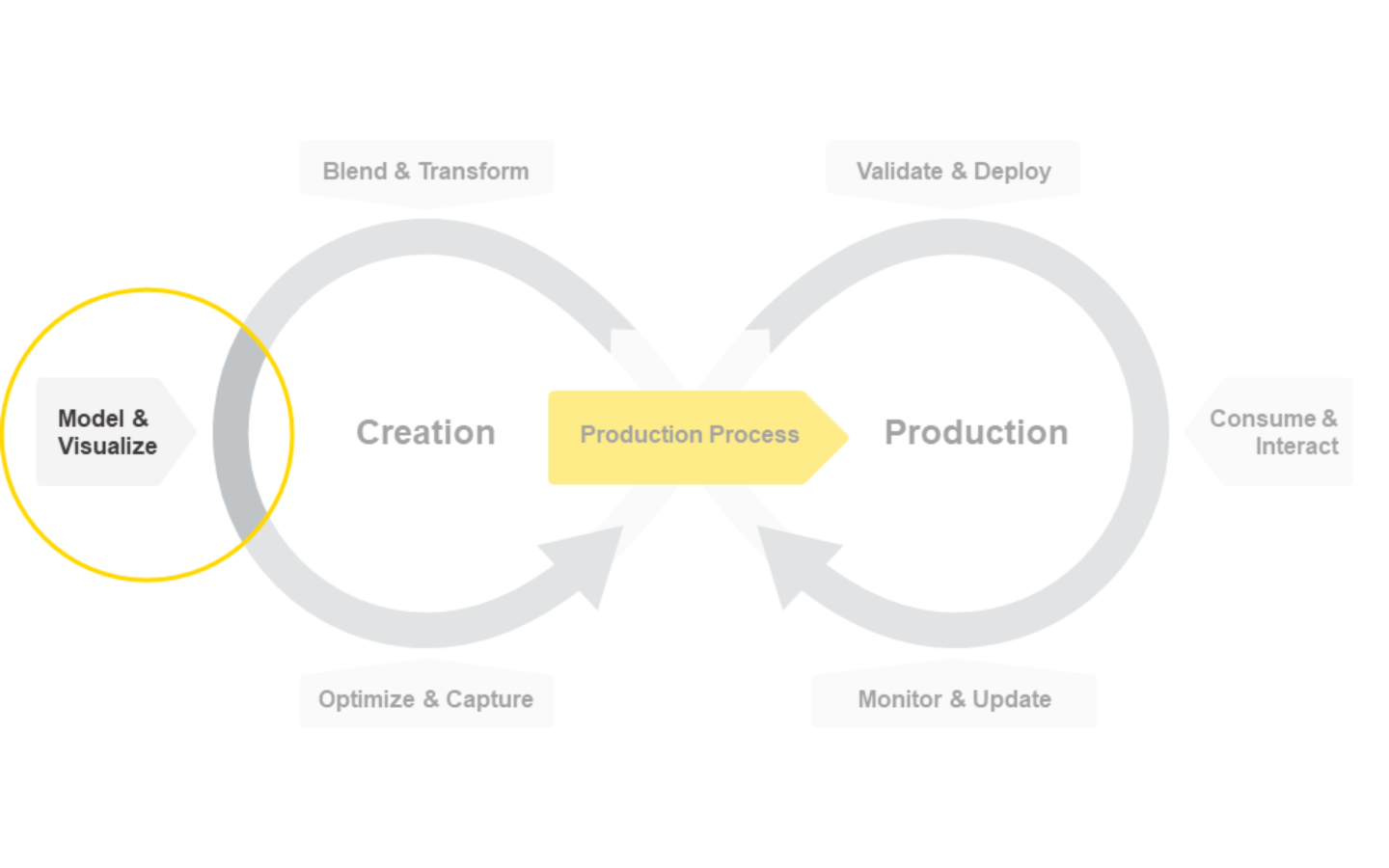

To train multiple models, select the best one, retrain it, and deploy it automatically, we need a hierarchy of workflows: the modeling workflow generates the training workflow, which in turn generates the production workflow (Fig. 1).

Figure 1: A diagram explaining the hierarchical structure of workflows necessary to build an application for selecting the best model and retraining and deploying its production workflow on the fly.
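The nesting in Figure 1 can be pictured with plain Python closures. This is only an analogy, not KNIME's API: each "workflow" below is a hypothetical function that returns the next level down, and `fit_mean_model` is a toy placeholder for a real learner such as a Random Forest.

```python
from typing import Callable, Dict, List

Row = Dict[str, float]
Model = Callable[[Row], float]
ProductionWorkflow = Callable[[List[Row]], List[float]]
TrainingWorkflow = Callable[[List[Row]], ProductionWorkflow]


def fit_mean_model(rows: List[Row], target: str) -> Model:
    """Toy model (hypothetical placeholder): always predicts the mean target value."""
    mean = sum(r[target] for r in rows) / len(rows)
    return lambda row: mean


def modeling_workflow(target: str) -> TrainingWorkflow:
    """Top level: decides what to train and returns a training workflow."""

    def training_workflow(rows: List[Row]) -> ProductionWorkflow:
        # Middle level: (re)trains on whatever data it receives.
        model = fit_mean_model(rows, target)

        def production_workflow(new_rows: List[Row]) -> List[float]:
            # Bottom level: only scores new data with the already trained model.
            return [model(r) for r in new_rows]

        return production_workflow

    return training_workflow


training = modeling_workflow(target="y")
production = training([{"x": 1.0, "y": 2.0}, {"x": 2.0, "y": 4.0}])
print(production([{"x": 3.0}]))  # [3.0]
```

The point of the design is that the training workflow can be called again with a larger dataset at any time, and each call yields a fresh production workflow without touching the modeling level.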

Thanks to Integrated Deployment, the entire system can be controlled and edited from the modeling workflow. By adding Capture Workflow nodes (Capture Workflow Start and Capture Workflow End), the data scientist selects which nodes are used to retrain the selected model and which nodes are used to deploy it. Furthermore, with switch nodes such as the Case Switch and Empty Table Switch, the data scientist can define the logic that determines which nodes are captured and added to the other two workflows.
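As a rough analogy for capturing and switching, the sketch below treats a workflow as an ordered list of named steps. The marker names `capture_start` and `capture_end` and all helper functions are hypothetical stand-ins for the Capture Workflow Start/End and Case Switch nodes, not KNIME code.

```python
from typing import Callable, Dict, List, Tuple

# A "node" is a (name, function) pair; a "workflow" is an ordered list of nodes.
Step = Tuple[str, Callable[[list], list]]

CAPTURE_START = "capture_start"  # hypothetical stand-in for Capture Workflow Start
CAPTURE_END = "capture_end"      # hypothetical stand-in for Capture Workflow End


def passthrough(data: list) -> list:
    """Marker nodes do not change the data."""
    return data


def capture(workflow: List[Step]) -> List[Step]:
    """Return only the nodes strictly between the two capture markers."""
    names = [name for name, _ in workflow]
    start, end = names.index(CAPTURE_START), names.index(CAPTURE_END)
    return workflow[start + 1:end]


def case_switch(choice: str, branches: Dict[str, List[Step]]) -> List[Step]:
    """Keep only the branch selected by `choice`, like a Case Switch."""
    return branches[choice]


def run(workflow: List[Step], data: list) -> list:
    """Execute nodes in order, feeding each output into the next node."""
    for _, fn in workflow:
        data = fn(data)
    return data


branches = {
    "random_forest": [("train_rf", lambda d: d + ["train_rf"])],
    "xgboost": [("train_xgb", lambda d: d + ["train_xgb"])],
}
modeling = [
    ("read", lambda d: d + ["read"]),
    (CAPTURE_START, passthrough),
    ("prepare", lambda d: d + ["prepare"]),
    *case_switch("random_forest", branches),
    (CAPTURE_END, passthrough),
    ("inspect_view", lambda d: d + ["inspect_view"]),
]
captured = capture(modeling)
print(run(captured, []))  # ['prepare', 'train_rf']
```

Running the captured segment executes only the preparation and the selected training branch; the data access and the interactive view stay in the modeling workflow.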

The framework depicted in Figure 1 is especially useful in business scenarios where a variety of production workflows are retrained and redeployed routinely. Without such a framework, the data scientist would have to intervene manually on the workflows every time the deployed model needs to be retrained with different settings.

Figure 2 is an animation of the modeling workflow, which uses Integrated Deployment to capture the training workflow, execute it on demand, and finally write out only the production workflow.

Figure 2: An animation scrolling through the full length of the modeling workflow.

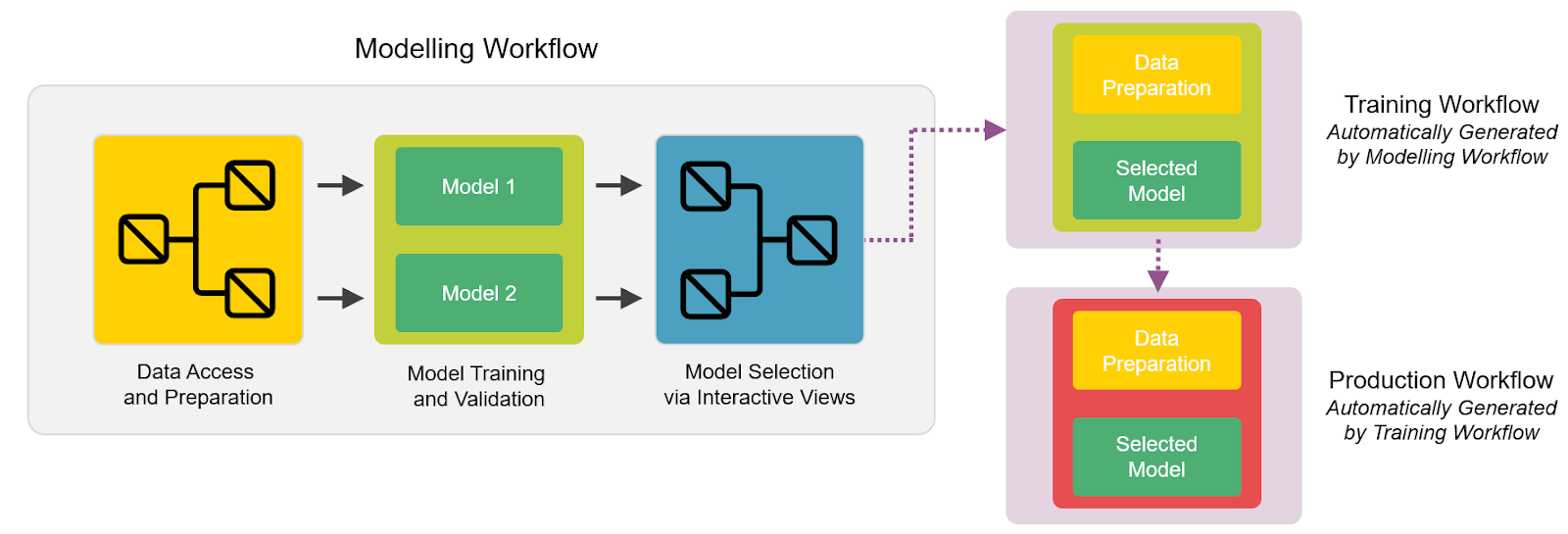

The workflow goes through a standard series of steps covering the data science life cycle to create multiple models, in this case a Random Forest and an XGBoost model. Along the way it offers interactive views, so that a data scientist can investigate the results and decide which model should be retrained on more data and finally deployed.

At this point you might feel a bit overwhelmed. It’s like Inception, the 2010 movie about a dream within a dream. If that is the case, don’t worry: it will get better.

We will walk through each part of the workflow here in the blog. You might find it helpful to have the example open in KNIME as you follow along. Remember to have KNIME 4.2 or later installed! The example workflow can be found on the KNIME Hub.

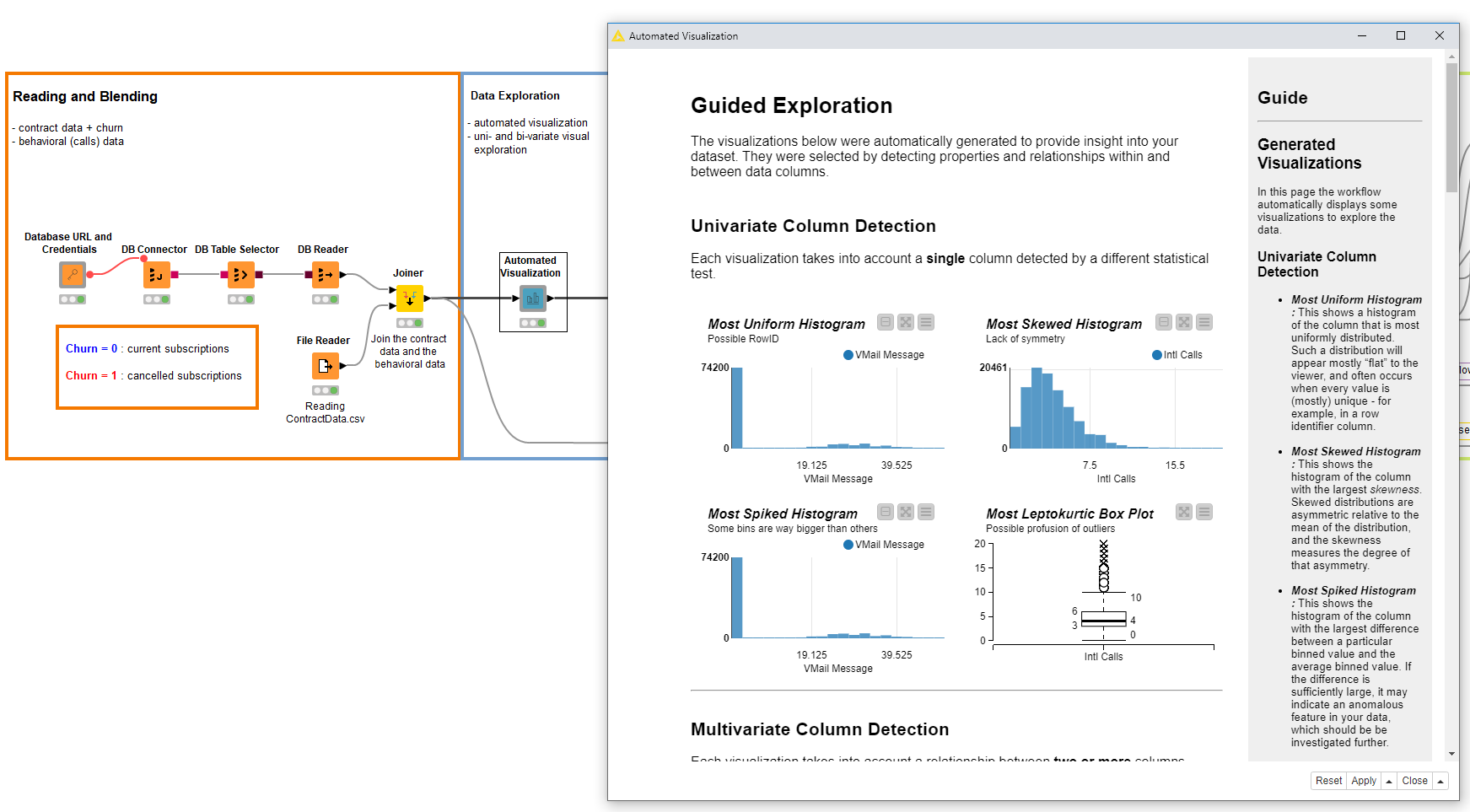

We start, as usual, by accessing data from a database and a CSV file and blending them. At this point the data scientist inspects the data via an interactive composite view called Automated Visualization (Fig. 3). This view offers a quick way to spot anomalies through a number of charts that automatically display the statistically most interesting aspects of the data.

Figure 3: The modeling workflow is used to access and blend the data, which is then inspected via an interactive view.

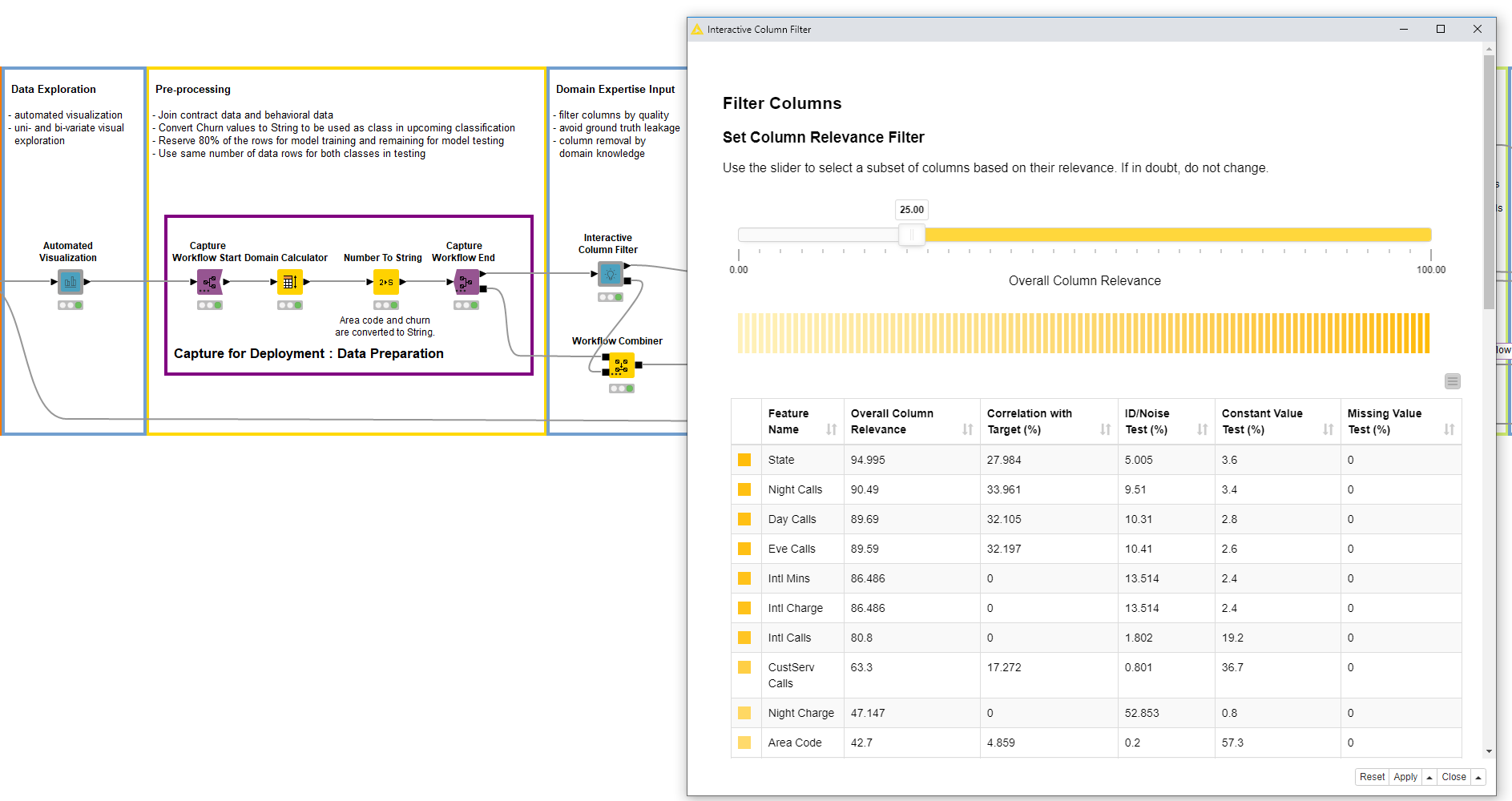

Once that is done, the data scientist builds a custom process to prepare the data for training the machine learning models. In this example we simply update the domain of all columns and convert a few columns from numerical to categorical. The data scientist captures this part with Integrated Deployment, because it will be needed again later when more data has to be processed. Next, the data scientist opens an Interactive Column Filter Component. This view allows for the quick removal of columns that the model should not use for the usual reasons, such as too many missing values, constant values, or unique values (Fig. 4).
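The three filtering criteria can be made concrete with a small rule-based sketch. The function name and both thresholds (`max_missing_ratio=0.5`, `max_unique_ratio=0.95`) are hypothetical choices for illustration; the Interactive Column Filter Component lets the user decide interactively rather than applying fixed cut-offs.

```python
from typing import Dict, List, Optional


def columns_to_drop(
    table: Dict[str, List[Optional[object]]],
    max_missing_ratio: float = 0.5,   # hypothetical threshold
    max_unique_ratio: float = 0.95,   # hypothetical threshold
) -> List[str]:
    """Flag columns with too many missing values, a constant value, or (nearly) unique values."""
    n_rows = len(next(iter(table.values())))
    dropped = []
    for name, values in table.items():
        present = [v for v in values if v is not None]
        missing_ratio = 1 - len(present) / n_rows
        distinct = len(set(present))
        if missing_ratio > max_missing_ratio:
            dropped.append(name)  # too many missing values
        elif distinct <= 1:
            dropped.append(name)  # constant column carries no information
        elif distinct / len(present) > max_unique_ratio:
            dropped.append(name)  # (nearly) unique, e.g. a row ID
    return dropped


table = {
    "id": [1, 2, 3, 4],
    "const": [7, 7, 7, 7],
    "mostly_missing": [None, None, None, 1],
    "age": [30, 30, 40, 50],
}
print(columns_to_drop(table))  # ['id', 'const', 'mostly_missing']
```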

Figure 4: The modeling workflow continues with the data preparation part, which is captured for later deployment. Another interactive view is used to quickly filter out irrelevant columns before the models are trained. The interactive filter is captured for deployment as well.

In this example, the data scientist is dealing with an enormous number of rows. To optimize the models quickly, she subsamples the data with a Row Sampling node, keeping only 10%. She knows she will need to retrain everything afterwards on more data to get accurate results, but for the time being 10% is enough.
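A minimal sketch of the 10% subsample, assuming simple random sampling without replacement and a fixed seed for reproducibility. The `sample_rows` helper is hypothetical and does not reproduce every option of the Row Sampling node; calling it with `fraction=1.0` corresponds to the later retraining on the full data.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sample_rows(rows: Sequence[T], fraction: float, seed: int = 42) -> List[T]:
    """Draw `fraction` of the rows uniformly at random, without replacement."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = round(len(rows) * fraction)
    # A fixed seed makes the subsample reproducible between runs.
    return random.Random(seed).sample(list(rows), k)


rows = list(range(1_000))
subset = sample_rows(rows, 0.10)  # quick experimentation
full = sample_rows(rows, 1.0)     # all rows, as in the final retraining
print(len(subset), len(full))  # 100 1000
```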

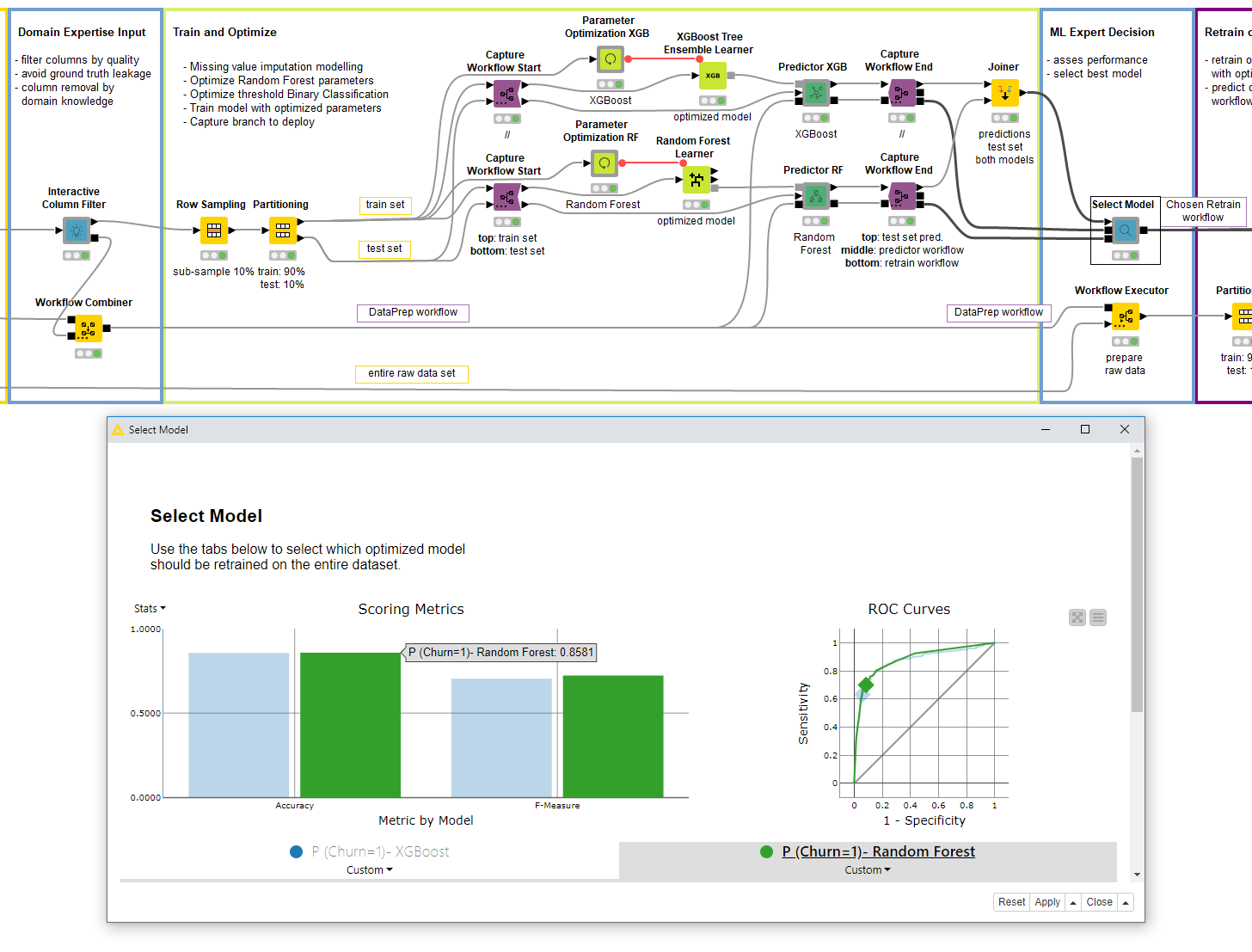

After splitting the subsampled data into training and test sets, she trains an XGBoost model and a Random Forest model with parameter optimization on top. So that the models can be retrained later, the data scientist captures this part with Integrated Deployment too. After training the two models, she uses yet another interactive view to compare them (Fig. 5). She chooses the Random Forest model, which the Component then outputs at its Workflow Object port.
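The split-then-compare step can be sketched as follows. The helpers are hypothetical, accuracy is just one possible metric, and `select_best` automates a choice that, in the workflow, the data scientist makes by hand in the interactive view.

```python
import random
from typing import Dict, List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_test_split(
    rows: Sequence[T], test_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Shuffle once with a fixed seed, then cut off the first part as the test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of test rows whose predicted class matches the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def select_best(scores: Dict[str, float]) -> str:
    """Name of the model with the highest score (automated stand-in for the interactive choice)."""
    return max(scores, key=lambda name: scores[name])


train, test = train_test_split(range(100))
print(len(train), len(test))  # 80 20
```

In use, one would fill a dictionary such as `{"random_forest": ..., "xgboost": ...}` with each model's test-set score and pass it to `select_best`.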

Figure 5: The data scientist can use an interactive view based on the Binary Classification Inspector node to browse the two models' performance metrics and select one model to be retrained and deployed. The selected model is produced as a Workflow Object at the output of the Component.

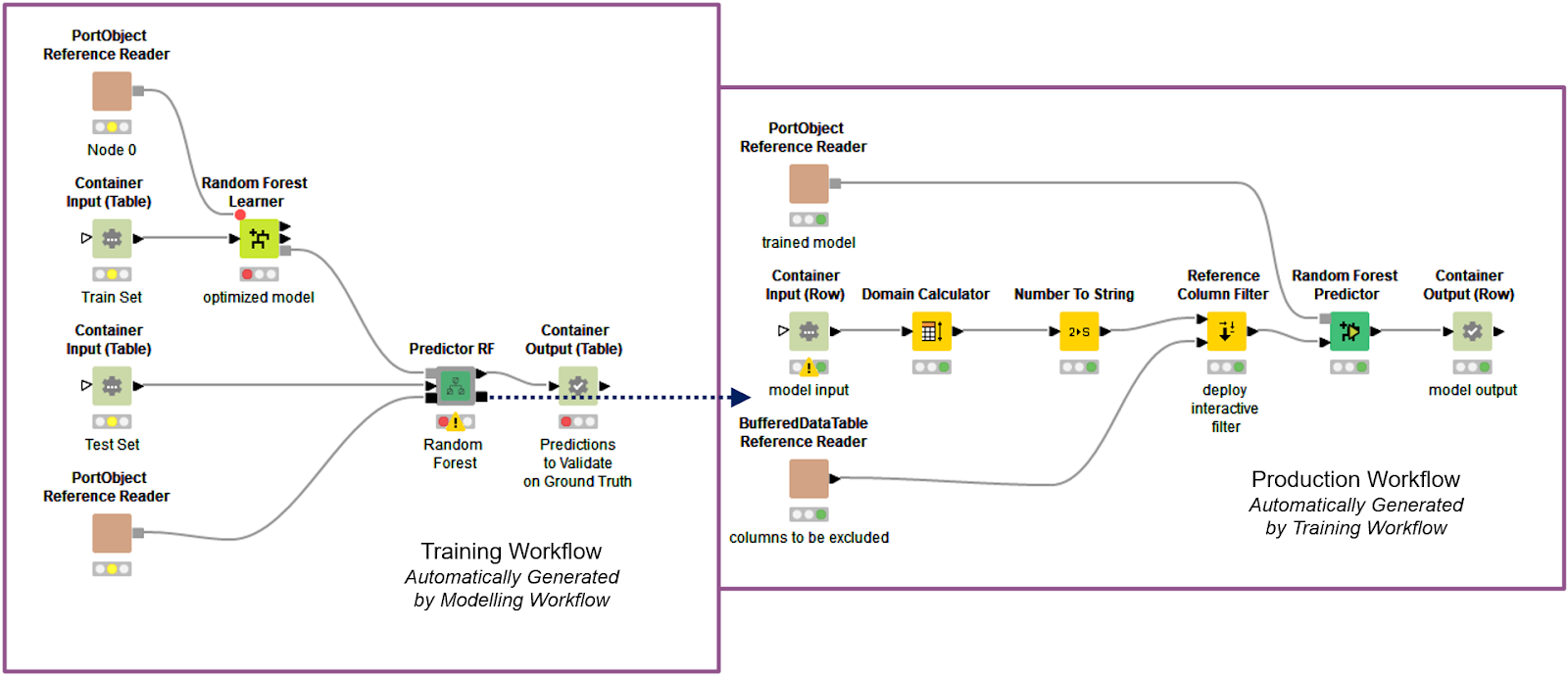

The previous Component outputs a training workflow for the selected model. A Workflow Executor node executes this training workflow, retraining the model on the entire dataset (no 10% subsampling this time), and its output is the production workflow (Fig. 6). And there you have it: a complete and automated example of continuous deployment! Hopefully you don’t have that overwhelmed feeling anymore.

Figure 6: The selected Random Forest model is written and executed as a training workflow on the entire dataset. Only then is a new production workflow generated and passed on to a later step in the modeling workflow.

The production workflow and the newly scored test set are used in the last interactive view (Fig. 7) to check the new performance, since more data was used this time. Two buttons are provided: the first downloads the production workflow in .knwf format; the second deploys it to KNIME Server and saves a local copy of the production workflow.

Figure 7: The final interactive view of the modeling workflow, used to inspect the performance on the entire dataset and decide whether or not to deploy the model. The workflow to be deployed can also be downloaded as a .knwf file.

The modeling workflow itself can be deployed to KNIME WebPortal via KNIME Server as an interactive Guided Analytics application. Data scientists can then use the application whenever they need to go through a continuous deployment procedure, similar to other CI/CD solutions for machine learning. Of course, this application is hard-coded for this particular machine learning use case, but you can modify the example to suit your needs.

Stay tuned for the next episodes in the Integrated Deployment blog series, where we will show how to use Integrated Deployment for a more complete and flexible AutoML solution.
