There's no denying that we're undergoing an AI revolution powered by large language models (LLMs). A class of deep neural networks, these systems are mostly used to understand and generate human language, and to mimic conversational behavior. They are called “large” because of their number of parameters, which ranges from hundreds of millions to even trillions.

Some of the best-known LLM-powered tools include OpenAI’s ChatGPT and Google’s Bard. These are proprietary solutions, which means there is little transparency: their source code, model weights, architecture, and inference strategy can’t be inspected, which raises concerns over data security and privacy.

Open source LLMs have gained traction as a result of these data security and privacy concerns. With an open source LLM, the source code, architecture, and inference strategy can be inspected, facilitating auditing and customization. Open source LLMs have the additional advantage of being free to use, even if there are sometimes licensing restrictions for commercial purposes.

In this article, we give you an overview of four popular LLMs (Llama 2, BLOOM, Claude 2, and Falcon), a short list of criteria to help you choose which one fits your use case, and pointers on how to apply them with the low-code data science tool KNIME Analytics Platform.

Open source LLMs: Llama 2, BLOOM, Claude 2 & Falcon

We chose Llama 2, BLOOM, Claude 2, and Falcon because these models have improved steadily over the past year and are often competitive with proprietary solutions.

As technology keeps changing at a very fast pace, we are sure that the tools mentioned in this article will soon be outperformed by newer ones. However, we see value in mentioning some of their features (e.g., multilingual support, ability to be extended and customized) and use cases (e.g., text completion, question answering) because these will likely stay relevant for a long time.

Open source LLMs are often built on earlier models, using a more refined architecture, more training data, and sometimes sets of rules or principles to increase their safety. This way of building models will also likely remain popular for a while.

Let’s get started!

Llama 2

Meta introduced a language model named Llama in February 2023 and released its second iteration, Llama 2, in July 2023. This open source LLM can be downloaded and customized for various applications, such as text generation, text editing, question answering, and summarization. Llama 2 is typically installed and run locally, which requires robust infrastructure but is good from a data privacy standpoint, since prompts and outputs stay on your own machines.

Llama 2, released in sizes from 7 to 70 billion parameters, has fewer parameters than the largest models in the GPT family, so depending on the task, its output may be less complex or nuanced. However, its smaller size also has advantages. Llama 2 can be customized for different tasks locally with fewer computational resources, making it more accessible to smaller organizations. It also reportedly runs faster than many other LLMs, including the GPT family. A limitation of Llama 2 is that, out of the box, it performs comparatively poorly on math, coding, and logical reasoning tasks. Overcoming these limitations requires a good amount of technical expertise.

BLOOM

In 2022, French-American AI company Hugging Face released BLOOM, an open source LLM trained to continue text given a prompt. It was developed by volunteers from more than 70 countries in collaboration with Hugging Face researchers. At release, its multilingual training uniquely positioned BLOOM in the market, as it can generate coherent text in 46 natural languages and 13 programming languages.

BLOOM does not have the conversational abilities of the GPT family, nor is it suitable for question answering. As these tasks become more central to LLMs, BLOOM may lose some of its relevance. However, with its 176 billion parameters, this LLM is still an adequate option for text completion, especially when the language in question is not English.

BLOOM can also be used for translation, since its training corpus is multilingual. This is a desirable feature, but it comes with one disadvantage: comparing BLOOM with other LLMs for text completion can be challenging, since most benchmarks and models are tailored to English. The few existing comparisons, though, suggest that BLOOM is likely to perform worse than closed-source models trained with reinforcement learning techniques such as reinforcement learning from human feedback (RLHF).
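Because BLOOM continues text rather than following instructions, a common way to use it for translation is few-shot prompting: show a couple of example pairs and leave the last target line open for the model to complete. The minimal sketch below, assuming plain text completion, only builds the prompt and trims the completion; the function names and example sentences are illustrative, and the actual call to the model is left out.

```python
def build_translation_prompt(examples, source_text, src="English", tgt="French"):
    """Build a few-shot prompt that a completion-only model can continue."""
    lines = []
    for src_sentence, tgt_sentence in examples:
        lines.append(f"{src}: {src_sentence}")
        lines.append(f"{tgt}: {tgt_sentence}")
        lines.append("")  # blank line separates the examples
    # The last pair is left open: the model is expected to complete the target line.
    lines.append(f"{src}: {source_text}")
    lines.append(f"{tgt}:")
    return "\n".join(lines)


def extract_translation(completion):
    """Completion models keep generating, so keep only the first line of output."""
    return completion.strip().split("\n", 1)[0].strip()


examples = [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")]
prompt = build_translation_prompt(examples, "Where is the station?")
print(prompt)
```

Trimming at the first newline matters because a completion model will often go on to invent further "English: … / French: …" pairs.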

Claude 2

Claude 2 is an LLM developed by San Francisco-based Anthropic. Unlike the other models covered here, it is not open source: its weights and source code are not publicly released, and it is accessed through Anthropic’s API. Like other generative AI models, it can perform many conversational and text processing tasks, such as question answering and summarization. As of December 2023, the tool’s API is only available in the US and UK, with a wider release planned for the near future.

One of Claude 2’s key features is its emphasis on Constitutional AI, a method in which a list of rules or principles guides the model during training, with the goal of making its output safer and more cautious. By default, Claude 2 aims for naturally flowing yet harmless conversation, although it can be directed to adopt a particular personality and tone. This model is considered more conservative than the GPT family; the flip side is that its interactions can feel more restrictive and less creative.
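To illustrate the idea behind Constitutional AI, here is a toy sketch of its critique-and-revision step: the model drafts a response, critiques it against a principle, and rewrites it. This is not Anthropic’s implementation. `generate` is a hypothetical stand-in for any LLM call, and the prompt wording and principles are invented for illustration. The published method samples principles at random; iterating over a list keeps the sketch deterministic. In that method, the revised responses are then used as fine-tuning data, followed by reinforcement learning from AI feedback.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt string, returns generated text.
Generate = Callable[[str], str]


def critique_and_revise(generate: Generate, user_prompt: str,
                        principles: List[str]) -> List[str]:
    """Toy critique-and-revision loop; returns every draft, initial response first."""
    response = generate(user_prompt)
    drafts = [response]
    for principle in principles:
        # Ask the model to critique its current response against one principle...
        critique = generate(
            f"Principle: {principle}\n"
            f"Prompt: {user_prompt}\n"
            f"Response: {response}\n"
            "Identify any ways the response violates the principle."
        )
        # ...then ask it to rewrite the response so the critique is addressed.
        response = generate(
            f"Response: {response}\n"
            f"Critique: {critique}\n"
            "Rewrite the response to address the critique."
        )
        drafts.append(response)
    return drafts


# Usage with a stub model (no real LLM): each call returns a numbered placeholder.
calls: List[str] = []


def stub_generate(prompt: str) -> str:
    calls.append(prompt)
    return f"draft {len(calls)}"


drafts = critique_and_revise(stub_generate, "Explain how vaccines work.",
                             ["Avoid harmful content.", "Be honest about uncertainty."])
```

With two principles, the stub is called five times (one initial draft, then a critique and a revision per principle), and `drafts` holds the initial response plus one revision per principle.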

Claude 2 is also very competent at “memorizing” conversations, and is able to ingest rather long text prompts and make sense of them. Depending on the benchmark, this LLM may even outperform some of the GPT models.
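Claude 2 launched with a context window of 100,000 tokens, which is what lets it take in long prompts. Before sending a long document, you can roughly check whether it fits. Exact counts depend on the model’s tokenizer, so the sketch below assumes about four characters per token, a common rule of thumb for English text; the function names and the 1,000-token reserve for the reply are illustrative choices.

```python
CONTEXT_WINDOW_TOKENS = 100_000  # Claude 2's context window at launch
CHARS_PER_TOKEN = 4              # assumption: rough average for English text


def estimated_tokens(text: str) -> int:
    """Approximate token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 1_000,
                    window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """True if the prompt plus room for the reply fits in the context window."""
    return estimated_tokens(text) + reserved_for_output <= window
```

For a precise count, use the tokenizer that belongs to the model you are calling rather than this heuristic.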

Falcon

The Falcon family of LLMs, developed by the United Arab Emirates’ Technology Innovation Institute (TII), is another open source option for text generation, translation (e.g., English to French, or German to Spanish), question answering, and content writing. Falcon can also be used to write code. As of December 2023, the Falcon family contains two smaller models, Falcon 7B and Falcon 40B, and a much larger one named Falcon 180B. They are freely available, but their licenses differ: Falcon 7B and 40B are released under the Apache 2.0 license, while Falcon 180B comes with a license that restricts some commercial uses.

With 180 billion parameters, Falcon 180B is, at the time of writing, the largest openly available language model. Although comparisons keep changing and heavily depend on how a benchmark is set up, Falcon 180B seems to outperform Llama 2 in some cases, likely due in part to its much larger size. Unlike many other popular open LLMs, Falcon was not built on Llama models. Instead, it was trained from scratch, largely on RefinedWeb, a filtered and deduplicated web corpus containing trillions of tokens.

3 criteria to choose the open source LLM for your project

Which open source LLM to choose depends on your use case, requirements, and resources.

1: Consider the nature of your application

Do you want an open source LLM for code completion, question answering, or text translation? If your use case has a commercial purpose, it is also important to check which models have a suitable license.

2: Consider your technical requirements

Would you rather interact with the LLM through an API, or keep your data private by running the model locally? Some open source LLMs take longer to perform inference, so also consider how much latency is acceptable for the use case at hand.
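To make the latency question concrete, here is a back-of-the-envelope sketch, assuming the response is generated token by token at a steady rate: total time is roughly the wait for the first token plus the number of generated tokens divided by the generation speed. The function name and the figures in the example are hypothetical placeholders; measure throughput on your own hardware or API.

```python
def estimated_latency_s(output_tokens: int, tokens_per_second: float,
                        time_to_first_token_s: float = 0.0) -> float:
    """Rough wall-clock time to generate one full response, in seconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    # Initial wait, plus the time to produce all output tokens at a steady rate.
    return time_to_first_token_s + output_tokens / tokens_per_second


# Hypothetical figures: a 300-token answer, 20 tokens/s, 0.5 s before the first token.
latency = estimated_latency_s(300, 20, 0.5)
print(f"~{latency:.1f} s per response")
```

If users wait on each answer interactively, a few seconds may already feel slow; for batch jobs that run overnight, much higher latency can be fine.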

3: Consider your resources

If you have to download a model and perform inference locally, make sure that your infrastructure is sufficiently powerful. If you are going to use an API (e.g., through Hugging Face), a reliable internet connection is essential.
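A quick way to judge whether local hardware is powerful enough is to estimate the memory needed just to hold the model weights: the number of parameters times the bytes per parameter. The sketch below does this arithmetic for models mentioned above. It gives a lower bound only; activations, the attention cache, and framework overhead add to it, and the function name is our own.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Lower bound on memory for the model weights alone, in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Parameter counts of models discussed in this article.
MODELS = {"Llama 2 7B": 7e9, "Llama 2 70B": 70e9, "Falcon 180B": 180e9}

for name, params in MODELS.items():
    fp16 = weights_memory_gb(params, "fp16")
    int4 = weights_memory_gb(params, "int4")
    print(f"{name}: ~{fp16:.0f} GB at fp16, ~{int4:.0f} GB at 4-bit")
```

Llama 2 7B needs about 14 GB at 16-bit precision, while Falcon 180B needs about 360 GB, far beyond a single consumer GPU. Quantized models, such as those typically distributed for GPT4All, trade some accuracy for a much smaller footprint.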

Now that you’ve chosen which open source LLM you need, let’s look at how you can use it with KNIME.

How to use open source LLMs with KNIME

KNIME Analytics Platform is a free, open source tool to make sense of your data. It has an intuitive low-code, visual interface that gives users of all skillsets access to advanced data science techniques to build analyses of any complexity level, from automating spreadsheets, to ETL, to machine learning.

KNIME's AI Extension provides functionality to connect to and prompt large language models. You can use it to work with many open source LLMs. Here's how.

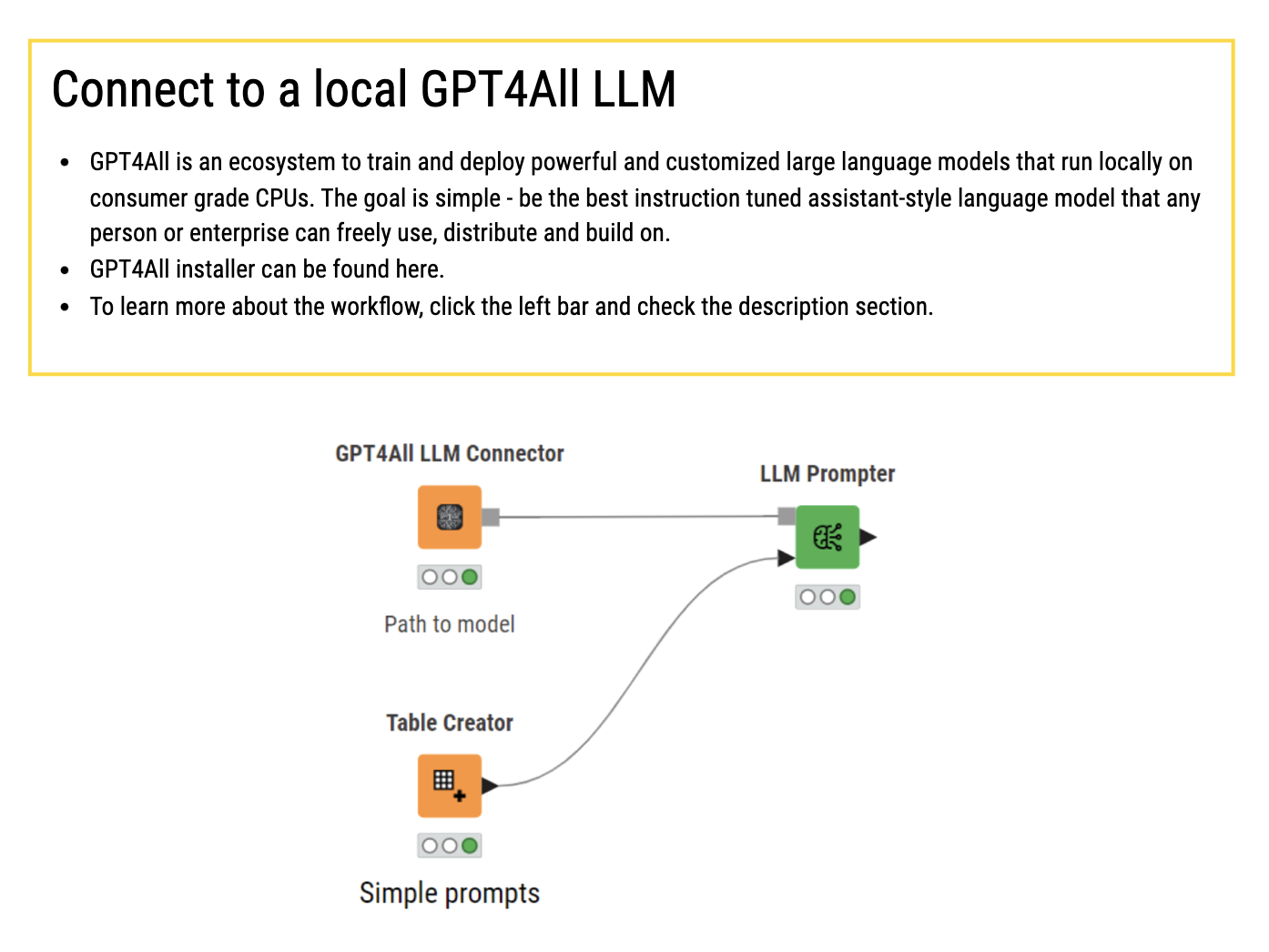

If you want to use an LLM that needs to be executed locally, install GPT4All, download the model, connect to it from KNIME, and you are ready to start your interaction. You can download an example workflow using one of the GPT4All connectors from KNIME Community Hub, here.

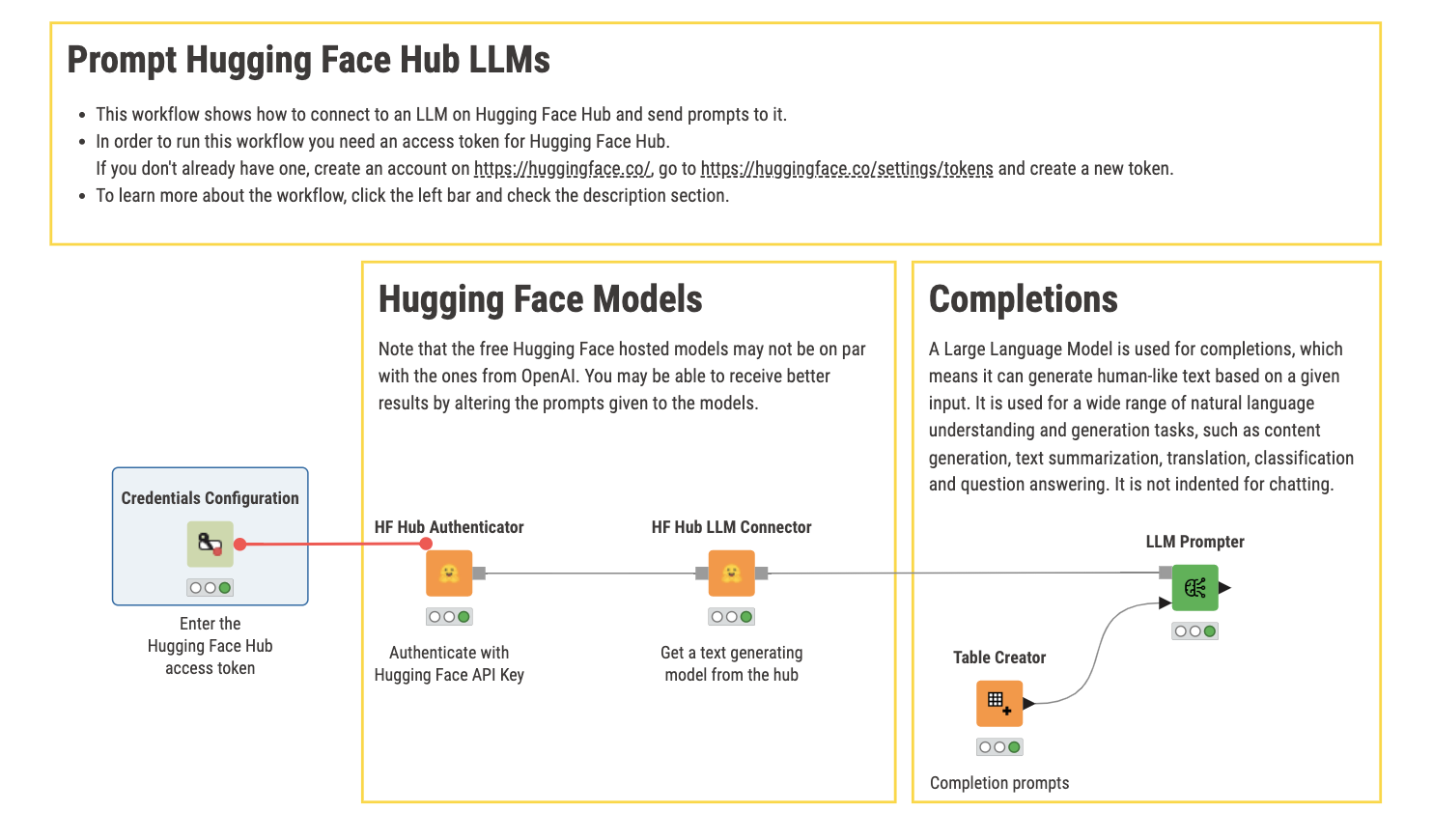

If you are connecting to an LLM API available through Hugging Face, the first step is to get an access token for the Hugging Face Hub. Once authenticated, you can connect to one of the available models and start sending it prompts. Here is a simple workflow that does exactly that.
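Outside KNIME, the same token-based flow looks roughly like the sketch below, which uses only the Python standard library. It assumes Hugging Face’s serverless Inference API endpoint as it existed at the time of writing (`https://api-inference.huggingface.co/models/<model id>`) with the token sent as a Bearer header; check the current Hugging Face documentation, as endpoints change. Reading the token from an `HF_TOKEN` environment variable and the function names are our own choices, and `tiiuae/falcon-7b-instruct` is just one example model ID.

```python
import json
import os
import urllib.request

# Assumed serverless Inference API base URL (late 2023); verify against current docs.
API_BASE = "https://api-inference.huggingface.co/models/"


def build_request(model_id: str, prompt: str, token: str,
                  max_new_tokens: int = 100) -> urllib.request.Request:
    """Build an authenticated POST request carrying the prompt as JSON."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        API_BASE + model_id,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def query(model_id: str, prompt: str) -> object:
    """Send the prompt and return the parsed JSON response (needs network and a token)."""
    req = build_request(model_id, prompt, os.environ["HF_TOKEN"])
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Example (not executed here): query("tiiuae/falcon-7b-instruct", "What is KNIME?")
```

For text generation models, the response is typically a list of objects with a `generated_text` field; keeping request building separate from sending makes it easy to inspect what will be sent before spending API calls.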

For more examples of how to interact with LLMs through KNIME, please check out our AI Extension example workflows.

Start talking to robots with open source LLMs

This article highlights some of the advantages of open source LLMs: they are more transparent, customizable (even if this requires expertise!), and usually available for free. Note, however, that not everything about them is positive: LLMs are a very powerful technology, and releasing their source code and weights openly also makes them easier for bad actors to misuse.

Now you are ready to start talking to robots, and to integrate their output into your KNIME applications! Download KNIME.