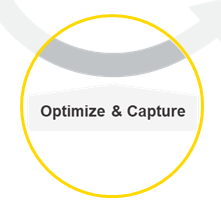

The data scientist doesn’t work in a vacuum but often in teams, research groups, and in companies that need end-to-end data science. We aim to support every step of the data science life cycle to give individuals and teams the means to access data from multiple sources, use and blend favorite tools, data types, and techniques, collaborate easily across technical disciplines in an intuitive environment, and productionize data science solutions quickly and reliably.

For this new release, the developers at KNIME have worked on new features to enhance the options for data science teams at each stage of their data science projects.

Let’s take a walk around the Data Science Life Cycle to look at just some of what’s new.

Greater Data Access Options and Flexible File Handling

The advantage of in-database processing is that the data stays and is operated on in your database without having to be transferred to KNIME. This release sees the addition of the Snowflake Connector node. Connect to your Snowflake instance and visually assemble database statements that then get executed in the Snowflake database.

With increasing numbers of people in the KNIME community working with KNIME in cloud and hybrid environments, the File Handling framework was already rewritten in the last release to give the community not only a consistent experience across all nodes and file systems but also considerable performance improvements.

We’ve now improved type handling in reader nodes such as CSV and Excel, and added an option to append a file identifier when reading multiple files. And we‘ve added a whole bunch of nodes to the new framework plus some new connectors, for example Azure Data Lake Gen 2 Connector and the SMB Connector.

Conda Environment Propagation for R

Previously a capability only available for Python, this node now also ensures the existence of a specific configurable Conda Environment and propagates the environment to downstream R nodes. This is particularly handy when moving to a production environment. You can recreate the Conda environment used on the source machine (e.g., your personal computer) on the target machine (e.g., a KNIME Server instance).

Smooth Execution with Scheduled Retries

There are so many reasons why execution of a scheduled job might fail. A common one is that the job can’t temporarily connect to the third party data source. Now the option to specify a number of retries will ensure that the job still gets executed even if the original run failed.

Speedy and Reliable Production Process

Here we have a great example of a feature that comes in very handy for data scientists who need to productionize their workflows. The KNIME Integrated Deployment extension is now out of labs and ready for your production environments. This extension lets you capture production workflows from the very same workflow you used to create data science. It has two new additions, too: the Workflow Reader and Workflow Summary Extractor nodes. You’ll see these two nodes in use when we publish our Data Science CI/CD blueprints, later this summer.

Embeddable, Shareable, User-Friendly Data Apps

Taking a data science workflow and integrating into an end-user application is non-trivial, often consisting of considerable engineering work.

Data Apps turn months of work into hours. You can now build a data app just like everything else in KNIME: with workflows, no coding required.

Create a multi- or single-page interactive application with components and within the components use widget nodes to design the user interface on each page. You can easily scale adoption of your data apps with shareable links or grab an embed code to integrate into a third-party application.