LSTM recurrent neural networks can be trained to generate free text

As first published in InfoWorld.

Let’s see how well AI can imitate the Bard.

“Many a true word hath been spoken in jest.”

― William Shakespeare, King Lear

“O, beware, my lord, of jealousy;

It is the green-ey’d monster, which doth mock

The meat it feeds on.”

― William Shakespeare, Othello

“There was a star danced, and under that was I born.”

― William Shakespeare, Much Ado About Nothing

Who can write like Shakespeare? Or even spell like Shakespeare? Could we teach AI to write like Shakespeare? Or is this a hopeless task? Can an AI neural network describe despair like King Lear, feel jealousy like Othello, or use humor like Benedick? In theory, there is no reason why not, as long as we can teach it to.

From MIT’s The Complete Works of William Shakespeare website, I downloaded the texts of three well-known Shakespeare masterpieces: “King Lear,” “Othello,” and “Much Ado About Nothing.” I then trained a deep learning recurrent neural network (RNN) with a hidden layer of long short-term memory (LSTM) units on this corpus to produce free text.

Was the neural network able to learn to write like Shakespeare? And if so, how far did it go in imitating the Bard’s style? Was it able to produce a meaningful text for each one of the characters in the play? In an AI plot, would Desdemona meet King Lear and would this trigger Othello’s jealousy? Would tragedy prevail over comedy? Would each character maintain the same speaking style as in the original theater play?

I am sure you have even more questions. So without further ado, let’s see whether our deep learning network could produce poetry or merely play the fool.

Generating free text with LSTM neural networks

Recurrent neural networks (RNNs) have been used successfully to generate free text. The most common neural network architecture for free text generation relies on at least one LSTM layer.

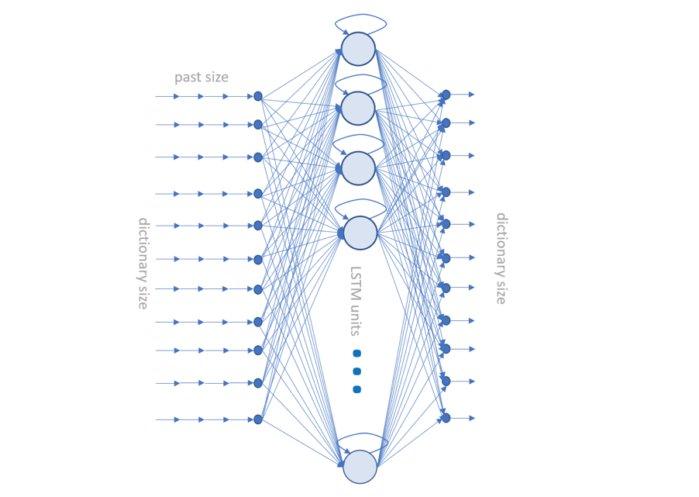

To train our first Shakespeare simulator, I used a neural network of only three layers: an input layer, an LSTM layer, and an output layer (Figure 1).

The network was trained at character level. That is, sequences of m characters were generated from the input texts and fed into the network.

Each character was encoded using one-hot encoding. This means that each character was represented by a vector of size n, where n is the size of the character set from the input text corpus, with a 1 at the position of that character and 0 everywhere else.

The full input tensor of size [m, n] was fed into the network, which was trained to associate the previous m characters with the next character at position m+1.
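The encoding and windowing described above can be sketched in a few lines of plain Python. This is only an illustration, not the KNIME workflow itself: the function names are made up for this example, and the window is assumed to slide forward one character at a time, which the original setup does not specify.

```python
def build_char_index(text):
    """Map each distinct character to an integer index (sorted for reproducibility)."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}


def one_hot(ch, char_index):
    """Return a vector of length n with a single 1 at the character's index."""
    vec = [0] * len(char_index)
    vec[char_index[ch]] = 1
    return vec


def make_training_pairs(text, m, char_index):
    """Slide a window of m characters over the text (assumed stride: 1).

    Each sample is an [m, n] list of one-hot vectors; its target is the
    one-hot vector of the character that follows the window.
    """
    samples, targets = [], []
    for start in range(len(text) - m):
        window = text[start:start + m]
        samples.append([one_hot(ch, char_index) for ch in window])
        targets.append(one_hot(text[start + m], char_index))
    return samples, targets


# Tiny demonstration with m=5 instead of the m=100 used in the article.
text = "O my fair warrior!"
char_index = build_char_index(text)
samples, targets = make_training_pairs(text, m=5, char_index=char_index)
print(len(samples), "samples of shape", [len(samples[0]), len(samples[0][0])])
```

With the real corpus, m would be 100 and n would be the number of distinct characters in the three plays.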

All of this leads to the following network:

- The input layer with n units would accept [m, n] tensors, where n is the size of the character set and m the number of past samples (in this case characters) to use for the prediction. We arbitrarily chose m=100, estimating that 100 past characters might be sufficient for the prediction of character number 101. The character set size n, of course, depends on the input corpus.

- For the hidden layer, we used 512 LSTM units. A relatively high number of LSTM units is needed to be able to learn all of these associations between the past m characters and the next character.

- Finally, the last layer included n softmax-activated units, where n is again the character set size. This layer produces the array of probabilities for each one of the characters in the dictionary, hence n output units, one for each character probability.

Figure 1. The deep learning LSTM-based neural network we used to generate free text. n input neurons, 512 hidden LSTM units, an output layer of n softmax units where n is the character set size, in this case the number of characters used in the training set.
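To get a feel for the size of this network, here is a back-of-the-envelope count of its trainable weights. It assumes a standard LSTM cell (four gates, each with input weights, recurrent weights, and a bias, with no peephole connections) and uses n = 62, the character set size reported for this corpus in the Figure 2 caption. The helper names are illustrative only.

```python
def lstm_param_count(input_size, units):
    """Weights of a standard LSTM layer: 4 gates, each with
    input weights, recurrent weights, and one bias per unit."""
    return 4 * (units * (input_size + units) + units)


def dense_param_count(input_size, units):
    """Weights of a fully connected layer: one weight per connection plus biases."""
    return input_size * units + units


n = 62            # character set size (from the Figure 2 caption)
lstm_units = 512  # hidden LSTM units

lstm_params = lstm_param_count(n, lstm_units)
dense_params = dense_param_count(lstm_units, n)
total_params = lstm_params + dense_params

print("LSTM layer:  ", lstm_params)
print("Output layer:", dense_params)
print("Total:       ", total_params)
```

Under these assumptions, almost all of the roughly 1.2 million weights sit in the LSTM layer, which is where the associations between past characters and the next character are stored.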

Notice that, in order to avoid overfitting, an intermediate dropout layer was introduced during training between the LSTM layer and the output dense layer. A dropout layer randomly deactivates some units during each iteration of the training phase, so that the network cannot rely too heavily on any single unit. The dropout layer was then removed for deployment.
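As a rough illustration of what a dropout layer does, the following plain-Python sketch zeroes out a random subset of activations during training and passes everything through unchanged at deployment. The dropout rate of 0.2 is an assumption for the example (the rate used in the experiment is not stated), and the rescaling of the surviving units follows the common "inverted dropout" convention rather than anything specific to this workflow.

```python
import random


def dropout(activations, rate, training, rng=random):
    """Inverted dropout on a list of activations.

    During training, each unit is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate) so the expected sum is unchanged.
    At deployment (training=False) the input is returned untouched.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)   # fixed seed so the example is repeatable
hidden = [1.0] * 512     # stand-in for the 512 LSTM outputs

train_out = dropout(hidden, rate=0.2, training=True, rng=rng)
deploy_out = dropout(hidden, rate=0.2, training=False)

print("units dropped during training:", train_out.count(0.0))
print("units dropped at deployment:  ", deploy_out.count(0.0))
```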

Building, training, and deploying the neural network

The network was trained on the full texts of “King Lear,” “Othello,” and “Much Ado About Nothing,” available from The Complete Works of William Shakespeare website, a total of 13,298 sentences.

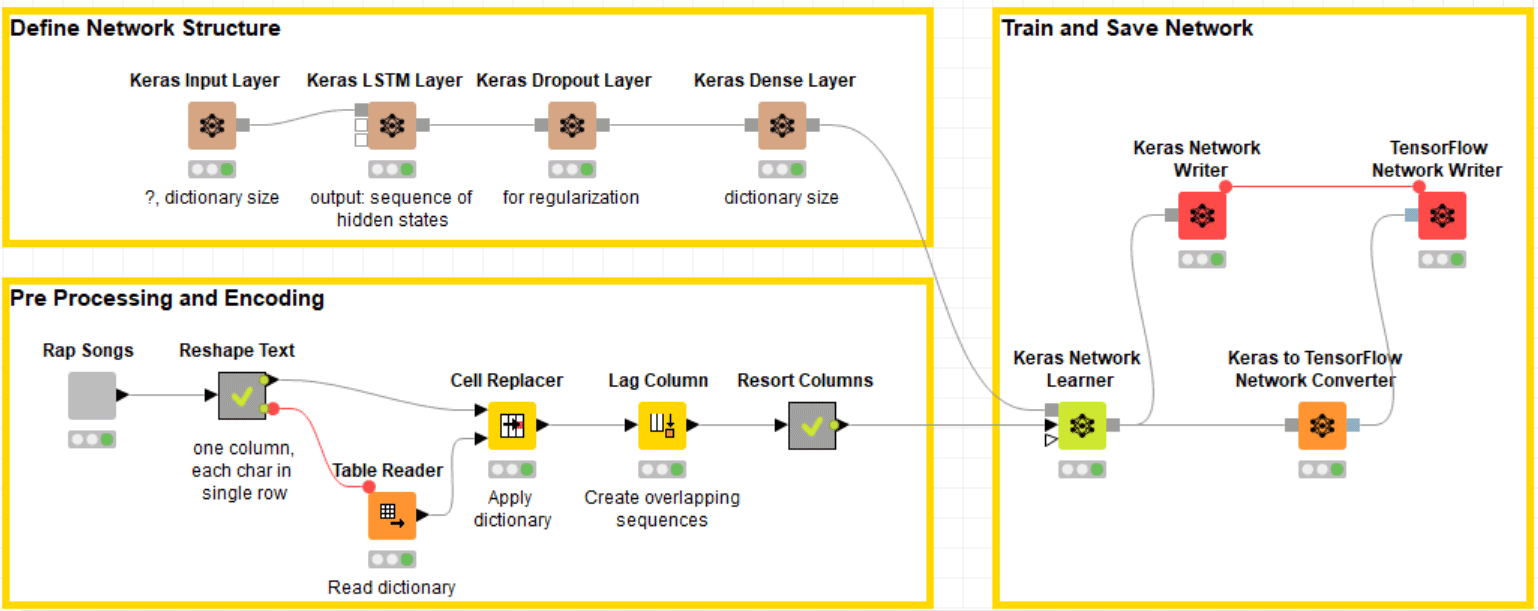

The neural network described above was built, trained, and deployed using the GUI-based integration of Keras and TensorFlow provided by KNIME Analytics Platform.

- The workflow to build and train the network is shown in Figure 2.

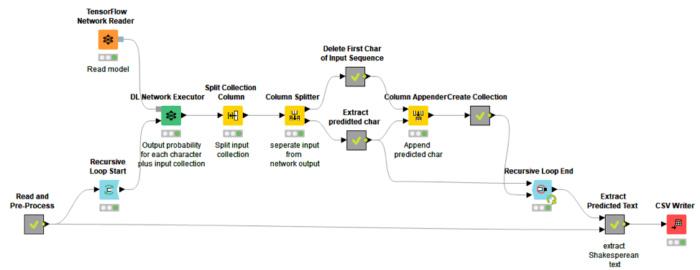

- The workflow to deploy the network to predict the final text, character by character, is shown in Figure 3.

These workflows have been copied and adapted from the workflows implemented in the blog post by Kathrin Melcher, “Once Upon a Time … by LSTM Network,” where a similar network was trained on texts from Grimm’s fairy tales and deployed to generate free text.

- Both workflows can be downloaded for free from the KNIME Hub.

In Figure 2, the brown blocks (nodes) in the Define Network Structure section (top left) build the different layers of the neural network. The data are cleaned, standardized, reshaped, and transformed by the nodes in the Preprocessing and Encoding section (lower left). Finally, the training is carried out by the Keras Network Learner node and the network is stored away for deployment.

Notice that if the training set is large, this network can take quite a long time to train. It is possible to speed it up by pointing KNIME Analytics Platform to a Keras installation for GPUs.

The deployment workflow in Figure 3 reads and uses the previously trained network to predict free text, one character after the next.

Figure 2. The training workflow trains a neural network (62 inputs -> 512 LSTM -> 62 outputs) to predict the next character in the text, based on the previous 100 characters. Training set consists of the texts of “King Lear,” “Othello,” and “Much Ado About Nothing” by William Shakespeare. Thus, the network should learn to build words and sentences in a Shakespearean style.

Figure 3. The deployment workflow takes 100 characters as the start and then generates text character after character in a loop, until 1,000 characters are generated, making the full, final Shakespeare-like text.
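The generation loop of the deployment workflow can be sketched as follows. This is a simplified stand-in, not the KNIME implementation: `predict_proba` is a hypothetical callable representing the trained network, and the next character is drawn at random according to the predicted probabilities, which is one common choice (picking the most probable character is another) and is an assumption here.

```python
import random


def generate_text(seed, predict_proba, alphabet, n_new=1000, m=100, rng=None):
    """Extend `seed` by `n_new` characters, one character at a time.

    `predict_proba` (hypothetical) takes the last m characters and returns
    one probability per entry of `alphabet`.
    """
    rng = rng or random.Random()
    text = seed
    for _ in range(n_new):
        window = text[-m:]                      # the last m characters
        probs = predict_proba(window)           # softmax output of the network
        next_char = rng.choices(alphabet, weights=probs, k=1)[0]
        text += next_char                       # feed the prediction back in
    return text


# Dummy "network" so the loop can be run without a trained model:
# it answers a space with "a" and anything else with a space.
alphabet = ["a", "b", " "]


def dummy_predict(window):
    return [1.0, 0.0, 0.0] if window.endswith(" ") else [0.0, 0.0, 1.0]


seed = "x" * 100
result = generate_text(seed, dummy_predict, alphabet,
                       n_new=10, m=100, rng=random.Random(42))
print(repr(result[100:]))
```

In the real deployment, the seed would be 100 characters of text, `n_new` would be 1,000, and `predict_proba` would one-hot encode the window and call the trained network.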

AI-generated Shakespearean text

Here are some results, after training the network for 50 epochs on the entire data set—the three selected theater plays—using 100 past characters for the input tensor and recursively generating 1,000 new characters.

Let’s start with 100 characters from a dialog between Othello and Desdemona (in red) and then see how our network would continue (in plain text).

- Othello

O my fair warrior!

- Desdemona

My dear Othello!

- Othello

It gives me wonder great as my content

To see you here before me. O my soul’s joy!

I am a man a worm.

- Gloucester

I know not, sweet:

I found your like a thief from the heart

That pirchas will be well.

The general speaks to be so

Turn a man, I think, besoou.

- Cassio

I pray you, sir, to lie: in this hand is not a tend and talking of it;

I would not be threaten dispatch.

Our good old friend,

Lay comforts the state, seek for him;

I will grife you be faster’d!

And the blessed course of dower:

Net forth enough to do you;

And that the Moor is defective in the letter!

Abhorre, heaven, I will go sor;

And the other too.

- Othello

I have a seet me such a trial of his speech,

That he shall live the Moor in the lies

That with daush’er

Holds it is a most poor man,

Whose welchers of the state,

A man that many call of their life

That have to lost the boy look to’t.

- Regan

Sir, to my sister?

- Oswald

I pray you, have your hand: you may receive it all to his sorrage, and makes the heavens

Cassio lies that in the heart

That I may speak: I’ll wast …

While Desdemona disappears from the dialog, a few minor characters from “King Lear” (Gloucester, Regan, and Oswald) trickle into the scene. We have created other free texts starting from different lines in the three plays, and it is interesting to notice that characters from the tragedies “Othello” and “King Lear” mix often, while characters from the comedy “Much Ado About Nothing” seem to be more self-contained.

The text is generated using words from Shakespearean English and its characteristic complex sentence structure. Not all sentences make perfect sense, but most seem to. Notice also that minor characters are assigned shorter dialogs, while Othello and Cassio, in this example, get the longest speeches—as it should be.

Using AI to write an original scene

Let’s use the first 100 characters (again in red) to trigger a new scene. Here I introduced the keyword “SCENE,” the place, the involved characters, and the name of the first character to speak. The neural network does the rest.

SCENE I.

Venice. A street.

Enter Roderigo and Iago

- Desdemona

I pray, talk me of Cassio.

Ay, so I hear the write to prive and there,

That she would seen him that present so lich wored old wat,

and the best conscionable than in this revolumance against him:

There’s son against father,

and thy father’s son and the best of our time hath no exalse your counsel watch

The worst is not a tender to the warlike isle,

That sunded must needs take the shame

which the revenges of the self-same malk than the best of our times;

keeps our fortunes fend for bearing to a strength,

Sight in their natures, letting go safely by the rack: I swear ‘tis, to be so

That she will send back my messenger.

- Gloucester

I see the rust in the stocks.

- King Lear

What a trifore be some cartiou, I can tell my way

Than should be assurather, despise my brother;

That I have passed him, tell me I was every think of fear,

That she may be honest yet he hath confess’d in him entertains

and think the next way to stain it,

That the main commet in the least

Would fail her breath,

That she may ...

Creating a new scene proved to be a much harder task than continuing an existing one. Desdemona’s talk starts well, and it is consistent with the original play. However, I am not sure I can follow it to the end.

Using AI to mix Shakespearean and Modern English

But seriously, how useful is an AI engine that produces archaic English text?

Can we somehow improve current English sentences with a touch of Shakespeare? Let’s feed our Shakespeare-trained network some modern text and see what emerges.

So, what kind of everyday, modern text could do with a bit of Shakespearean improvement? A software license agreement? Let’s see how much more interesting the network can make the start of a license agreement (in red).

This License refers to version of the GNU General Public License. Copyright also means copyright-bick,

Remade me any thing to his sword

To his salt and most hidden loose to be so for sings, but not in a libutt of his matter than that shall be sure as will be soldye

As master compary, do not live in traitor.

Bless thy five wits!

-Kent

O pity!

Sir, where is the patience now,

That this is so far from the sea and some bidings to dismantle

So many folds of save and honest.

-Brabantio

I must not think the Turk of Cassio in the strange metting the cribles of a charmer be the reviling of libe to say

That I can deceive him to the best advantage,

In her prophetic fairs of a little to presently at your powers; whereof I thank you, sir.

-Albany

Gloucester, I will prove upancy of his sport and first accuriors and guard and talking on the white.

-King Lear

Where are the thief?

Thou shalt never have the captains at the letter

To the Moor and thing we have not the better shall be sure as worth if he be anger—

-Regan

I pray you, have a countend more than think to do a proclaim’d there of my heart, Hot

The words save, honest, thief, master, traitor, and deceive seem to fit the context. Notice also that the dialogue sprouting from the start text of the license agreement interestingly involves mainly minor, less tragic characters from the plays.

For the rest, the un-understandable parts are as un-understandable to me as the original legal language. And to quote our network, “Sir, where is the patience now, …”

What deep learning learned from Shakespeare

We have reached the end of this experiment. We have trained a recurrent neural network with a hidden LSTM layer to produce free text. What have we learned?

To sum up, the network was trained on the full texts of Shakespeare’s plays “King Lear,” “Othello,” and “Much Ado About Nothing.” It learned to produce free text in Shakespearean style. It just needed a 100-character initial sequence to trigger the generation of free text.

We have shown a few different results. We started with a dialogue between Othello and Desdemona to see how the network would continue it. We also made the network write a completely new scene, based on the characters and place we provided. Finally, we explored the possibility of improving Modern English with Shakespearean English by introducing a touch of Shakespeare into the text of a license agreement. Interestingly enough, context-related words from Shakespearean English emerged in the free generated text.

These results are interesting because real Shakespearean English words were used to form more complex sentence structures even when starting from Modern English sentences. The neural network correctly recognized main or minor characters, giving them more or less text. Spellings and punctuation were mostly accurate, and even the poetic style, i.e., rhythms of the text, followed the Shakespearean style.

Of course, experimenting with data set size, neural units, and network architecture might lead to better results in terms of more meaningful dialogues.