Writing the perfect marketing email takes time—often days. Personalizing it for 130,000 contacts? That seems impossible. Or is it?

I’m a data scientist, and I’m here to show you that it is possible with GenAI!

With Generative AI (GenAI), you can analyze vast datasets in real time, uncover customer insights, and generate highly personalized email content at scale.

In this article, I'll show you how to build a data science application that:

- Accesses a database of 130,000 wine reviews (variety, location, winery, price, description).

- Uses natural language queries to extract insights (e.g., Which wine is most popular among women in California?).

- Generates customized email copy for each reviewer.

You’ll create this solution using the free, open-source KNIME Analytics Platform—no coding required! KNIME’s visual drag-and-drop interface makes it easy for anyone to automate personalized marketing at scale.

Download KNIME Analytics Platform and my example workflow to follow along.

Let’s get started! 🚀

Build a targeted marketing campaign using GenAI in KNIME

Let’s walk through the steps to build a GenAI workflow to optimize a targeted marketing campaign. You can download the workflow here to use yourself.

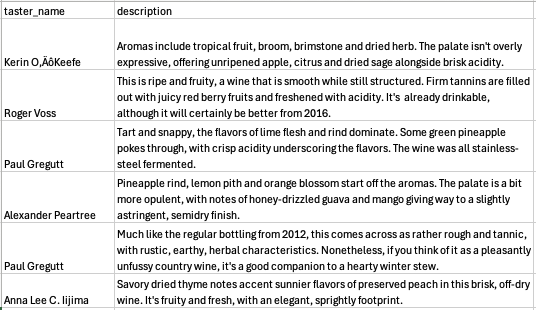

We use a dataset of over 130,000 wine reviews, which includes attributes such as reviewer name, wine description, region, and price. This dataset is available for download here, and we’ll use it to identify and segment specific customer groups so that we can tailor our marketing outreach to each one.

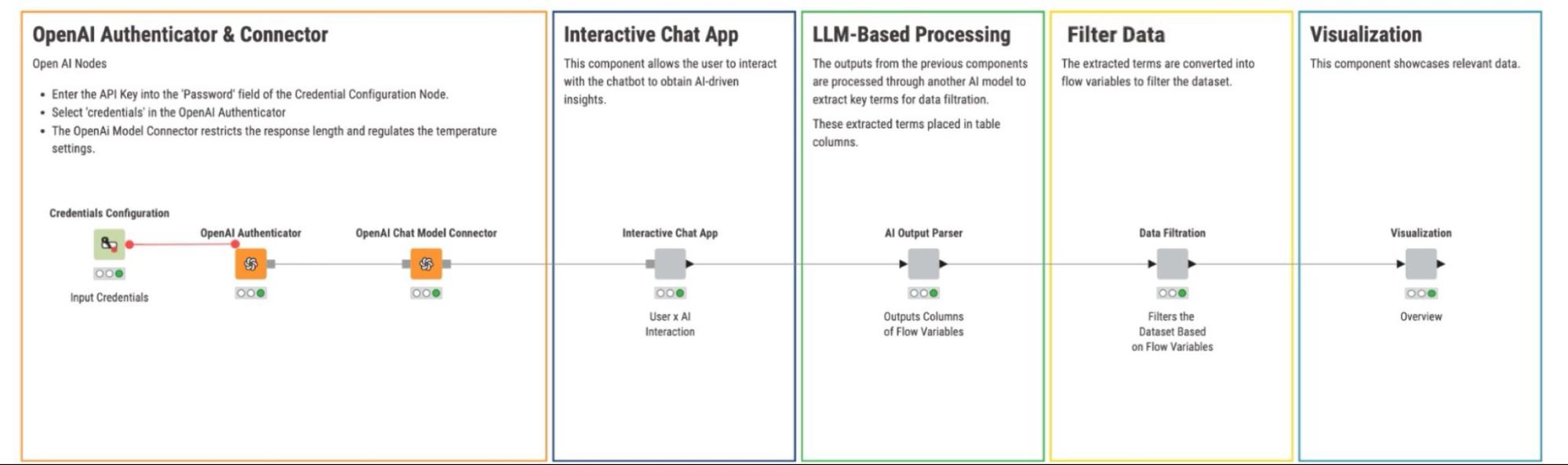

The workflow has five main components that take care of these steps:

1. Connect to an LLM: OpenAI authentication and connection

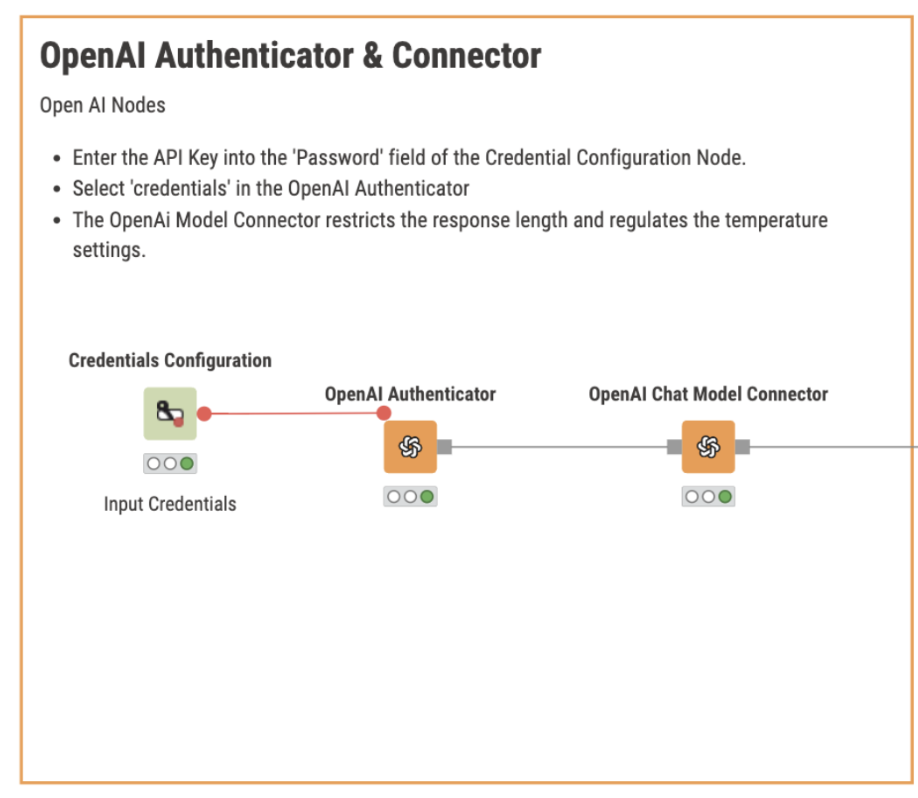

This step gives you secure access to the large language model (LLM) so that you can interact with the data. It serves as the foundation for the entire workflow.

The generative AI extension in KNIME enables seamless connections to various large language models and related services, including those from Azure, Giskard, and OpenAI. For this example, we use OpenAI. Its GPT-3.5 model was trained on internet data up to 2021, so the marketing insights and customer behavior predictions it offers are based on that training data.

Before we can start generating insights, we first have to authenticate and configure the selected model—in this case, OpenAI’s GPT-3.5.

Here’s how the process works:

- Credentials Configuration node: Enter an API Key into the ‘Password’ field.

- OpenAI Authenticator node: Authorizes access to the model using the credentials from the Credentials Configuration node.

- OpenAI Chat Model Connector: Connects to the API and allows you to select a specific chat model, such as GPT-3.5.

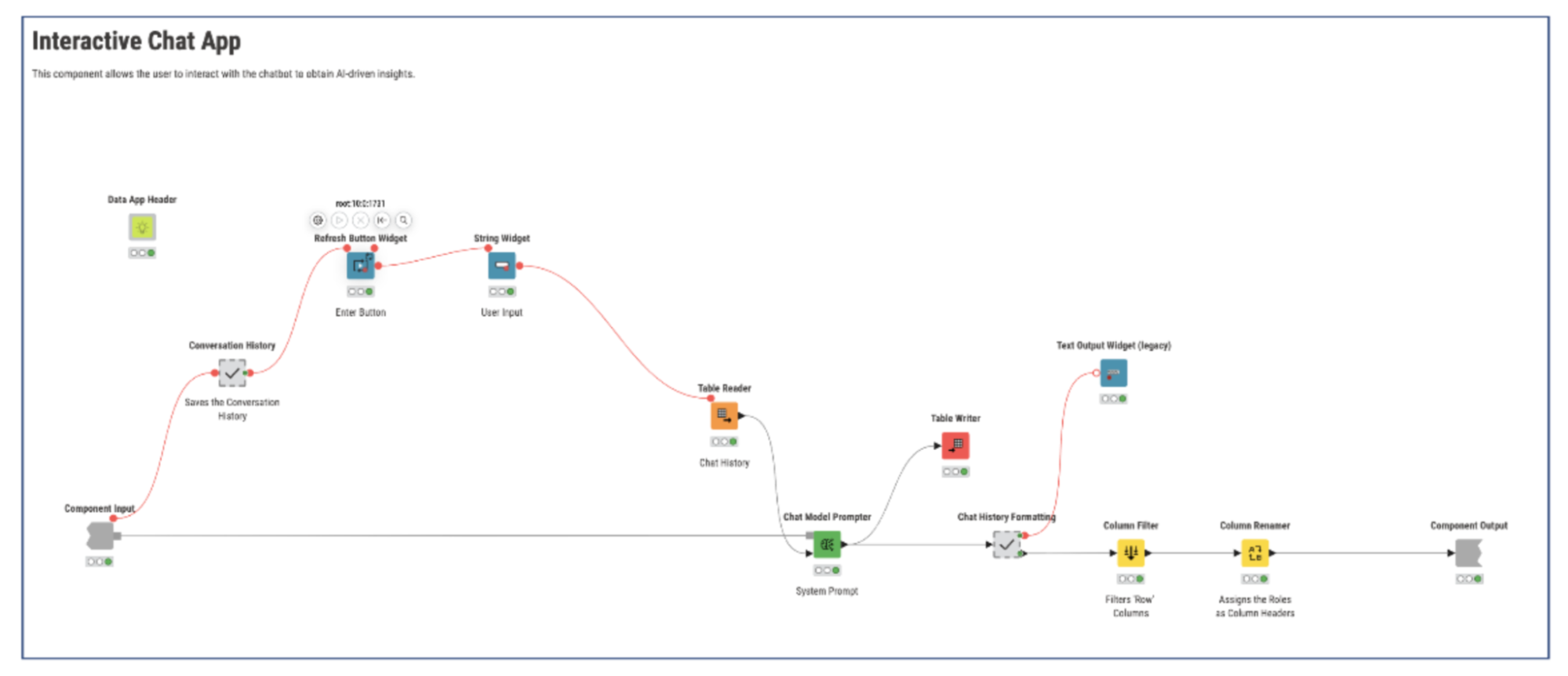

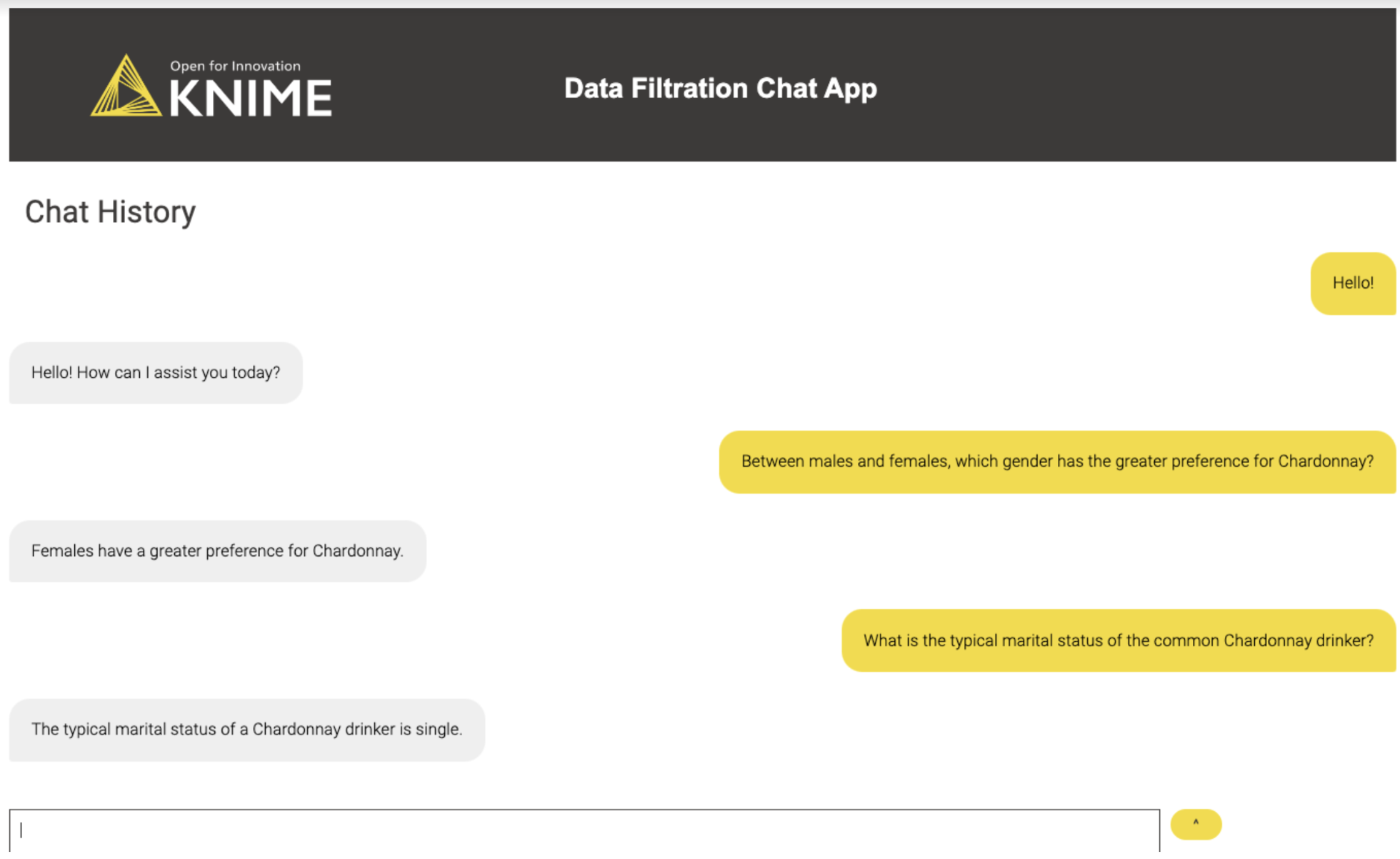
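For readers curious about what this connection amounts to outside of KNIME, here is a minimal Python sketch. It is not what the KNIME nodes run internally; it only shows the general idea of attaching an API key to a request for a chosen chat model. The environment variable name `OPENAI_API_KEY`, the placeholder key, and the helper `build_chat_request` are assumptions made for illustration, and the request is prepared but never sent.

```python
import json
import os
import urllib.request

# Public OpenAI chat completions endpoint.
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, model: str, user_message: str) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key acts as the credential
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Assumption for this sketch: the key lives in an environment variable,
# so it never appears in the code itself.
api_key = os.environ.get("OPENAI_API_KEY", "sk-placeholder")
request = build_chat_request(api_key, "gpt-3.5-turbo", "Hello")
# Actually sending it would be: urllib.request.urlopen(request)
```

In the KNIME workflow, the three nodes listed above cover these same concerns (credential, authorization, model selection) without any code.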

2. Interactive chat app

The Interactive Chat App component allows you to interact with an AI chatbot to get detailed insights into a target market.

By asking specific business-related questions, you can identify customer preferences or analyze market trends and get the data-driven insight you need to build a relevant, targeted campaign.

Inside the Interactive Chat App component, the Chat Model Prompter node processes each user query together with the conversation history. The response it generates is therefore context-aware, and the AI's output becomes increasingly specific as the interaction progresses.
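The idea of answering each question in light of the earlier conversation can be sketched in a few lines of Python. This is an illustration only, not the node's implementation: `ChatSession` and `fake_model` are hypothetical names, and the fake model simply reports how many messages it was given instead of calling a real LLM.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keeps the running conversation and sends all of it with each question."""

    def __init__(self, model: Callable[[List[Message]], str]) -> None:
        self.model = model  # any function mapping a message list to reply text
        self.history: List[Message] = []

    def ask(self, question: str) -> str:
        self.history.append({"role": "user", "content": question})
        # Pass a copy so the model call cannot mutate the stored history.
        reply = self.model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real LLM call: reports how much context it received."""
    return f"reply based on {len(messages)} message(s)"


session = ChatSession(fake_model)
first = session.ask("Which wine varieties appear most often?")
second = session.ask("And among reviewers in California?")
```

The second question on its own is ambiguous; it only makes sense because the first question and its answer are passed along with it.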

This component simplifies the process of gathering customer insights without requiring complex analysis tools.

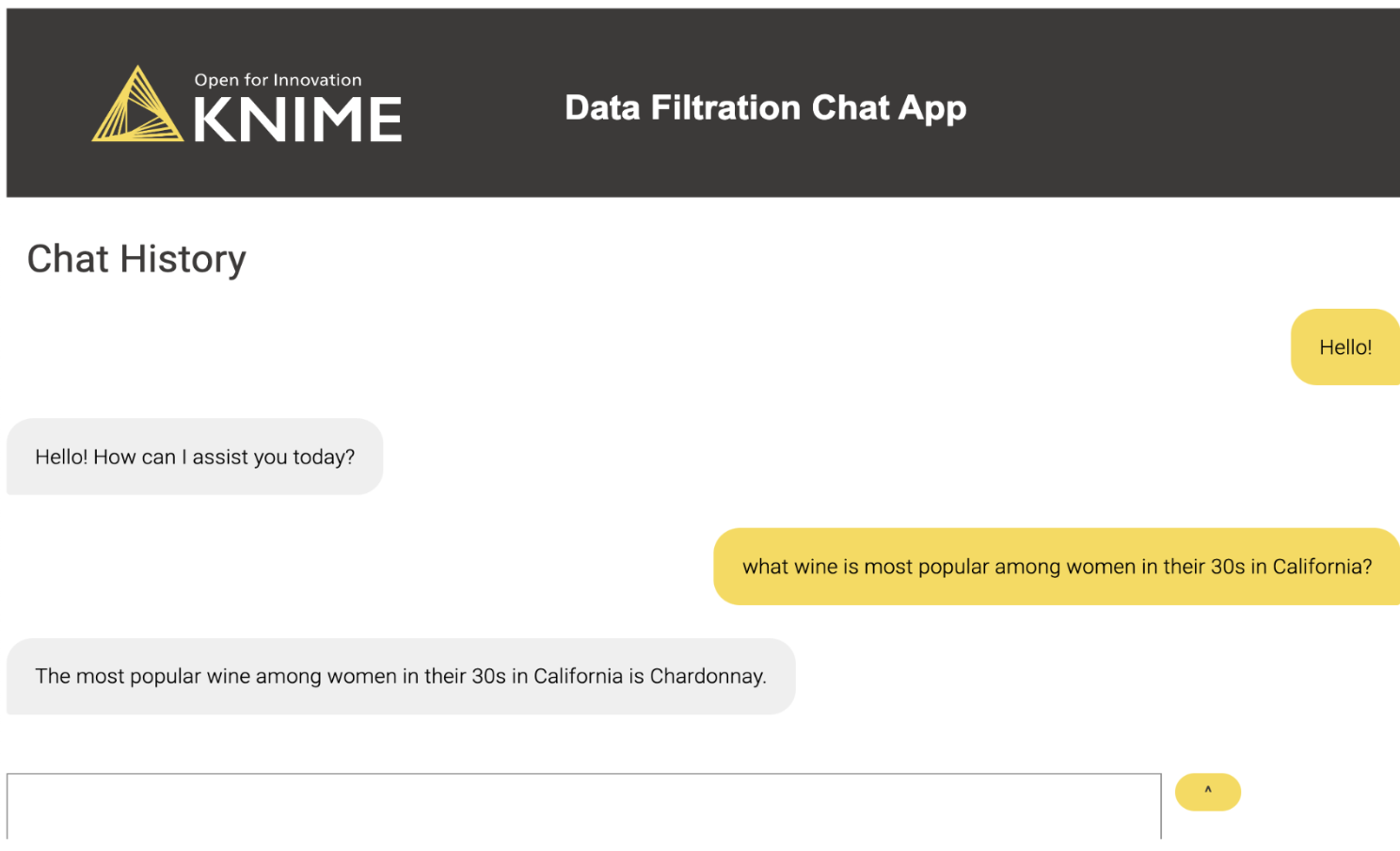

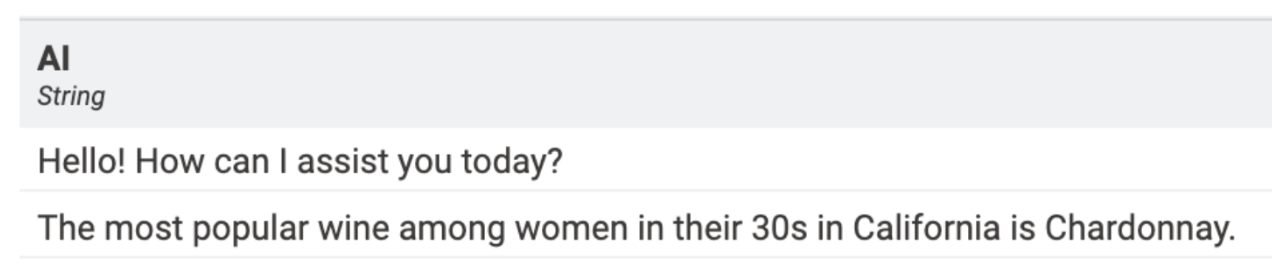

For example, a user could ask “What wine is most popular among women in their 30s in California?” and instantly receive actionable insights to guide marketing campaigns and product development.

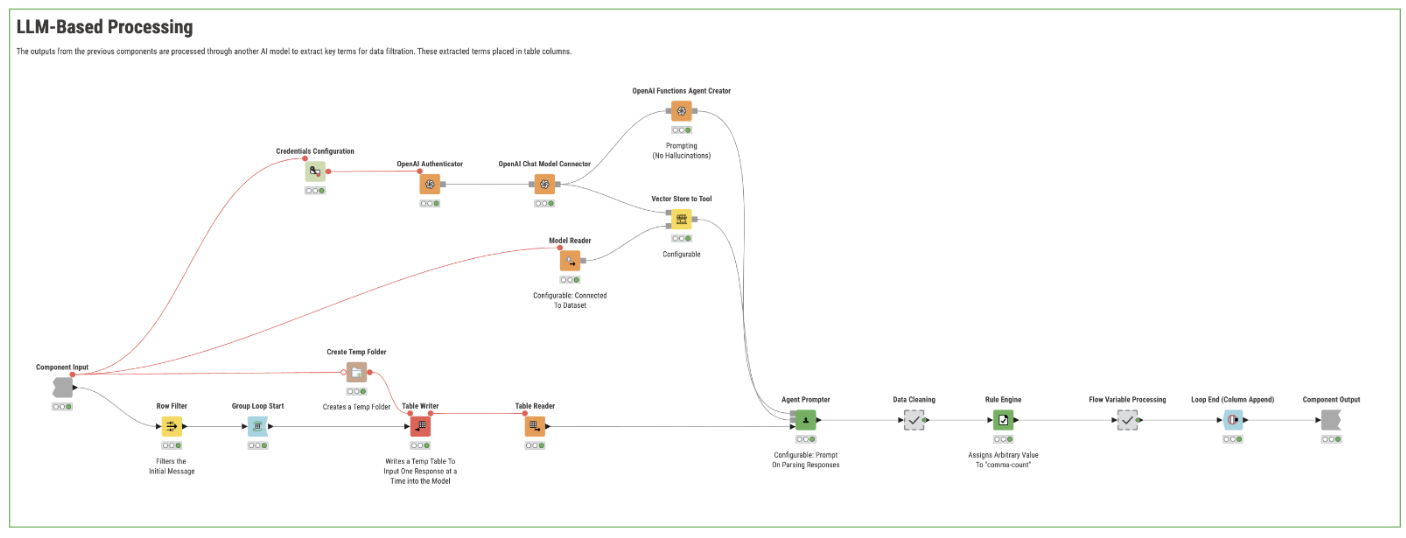

3. Easier segmentation with LLM-based processing

In this step, we want to isolate specific market segments or customer preferences.

Here, the output from the Interactive Chat App component is processed by a second AI model, which analyzes the chat responses and extracts key terms. These terms become the flow variables that we use to filter the data.

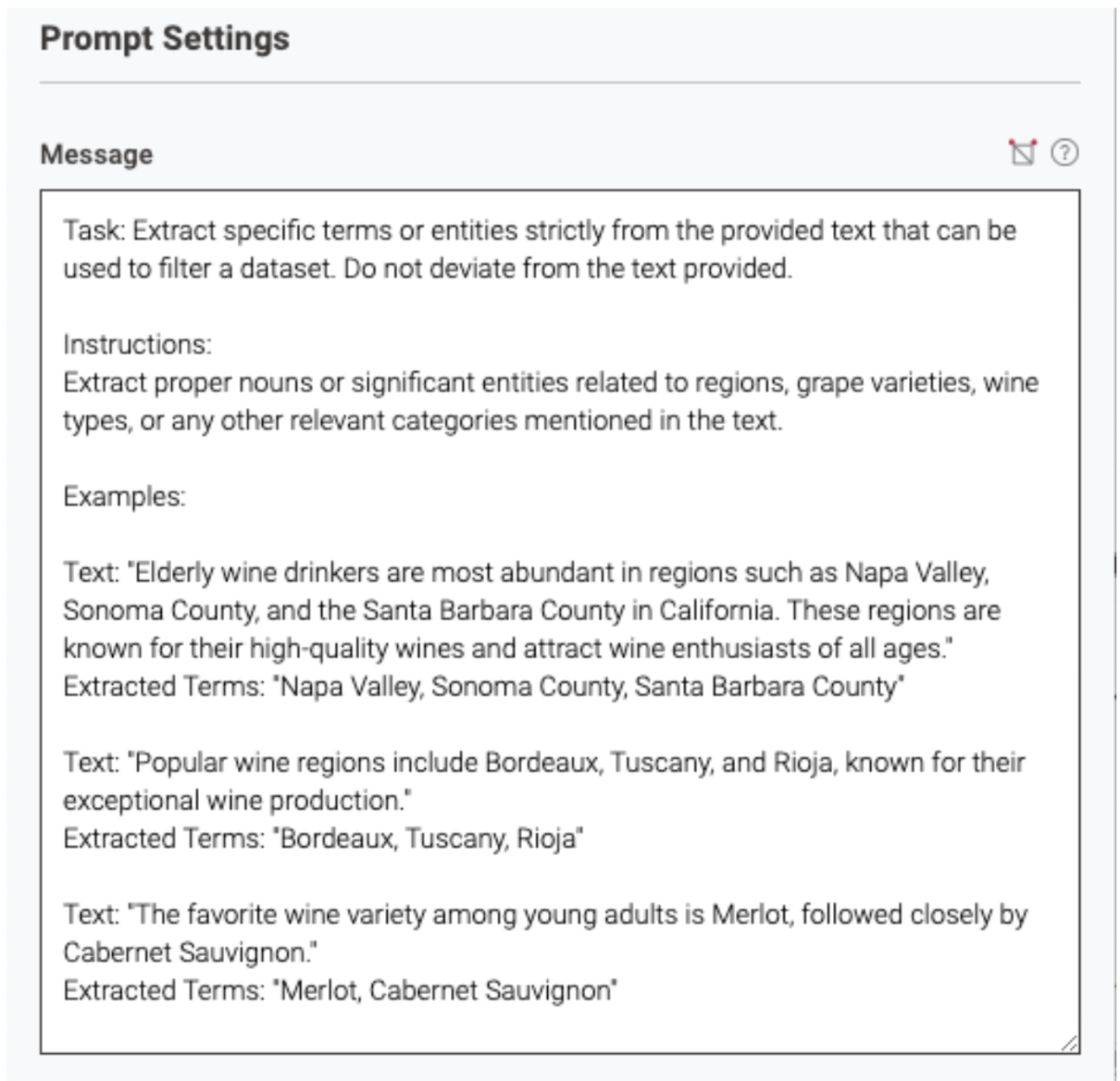

The core node responsible for parsing the responses is the Agent Prompter node. This node allows a prompt to be included in each iteration of text processing. This prompt guides the AI model to extract key terms from each conversation output generated by the chat app.

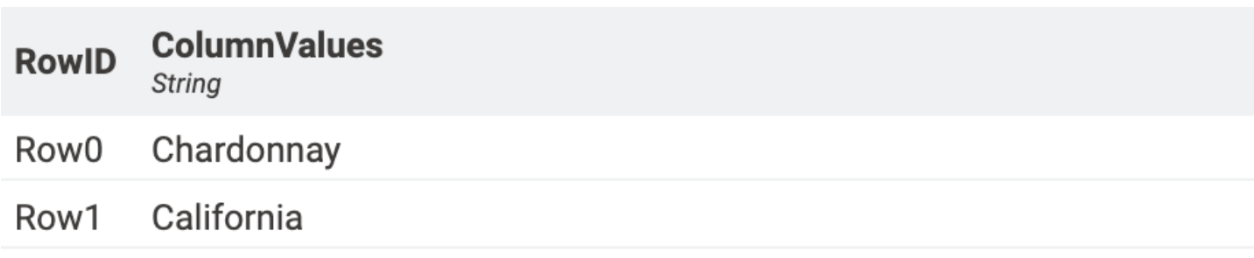

The extracted terms are then placed into table columns, organizing the data for easier filtration.

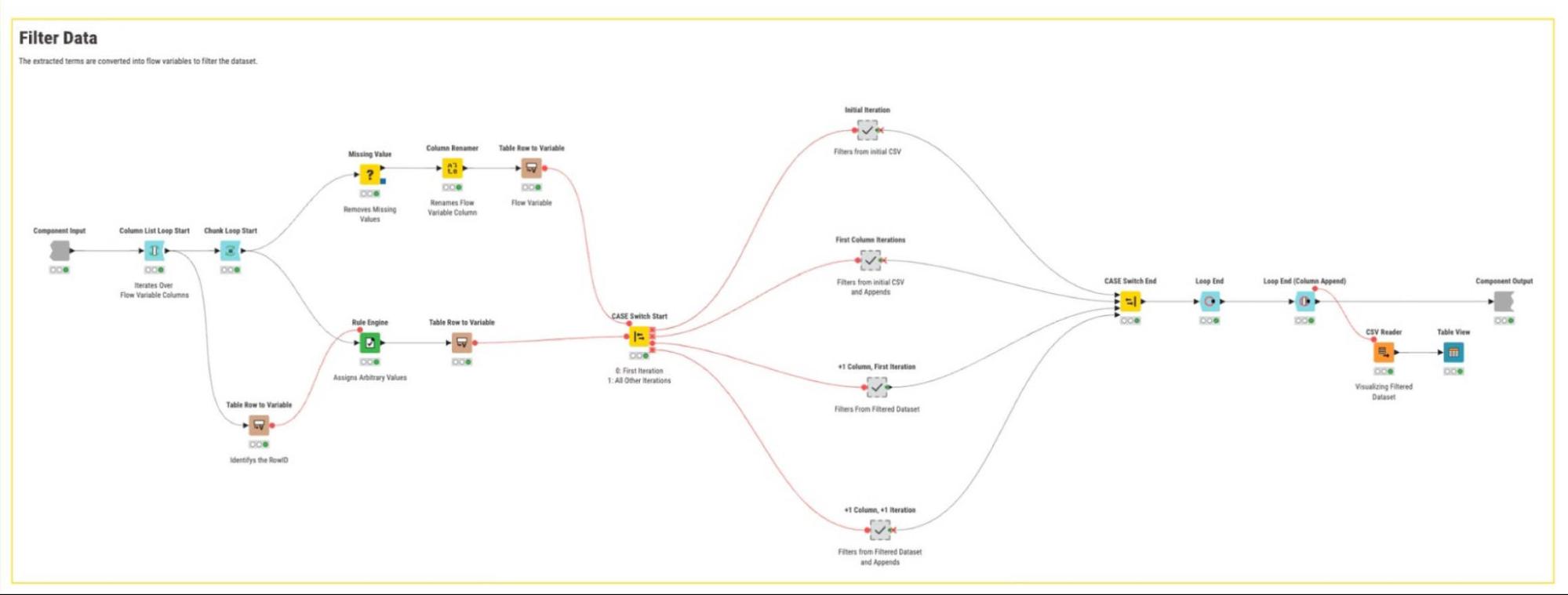
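As a rough illustration of turning extracted terms into columns, the Python sketch below parses a model response into a column-to-value mapping. The `column: value; column: value` response format, the column name `province`, and the helper `parse_key_terms` are all assumptions for this example; the actual output depends on the prompt used in the workflow.

```python
from typing import Dict


def parse_key_terms(llm_output: str) -> Dict[str, str]:
    """Turn 'column: value; column: value' text into a column -> value mapping."""
    terms: Dict[str, str] = {}
    for part in llm_output.split(";"):
        if ":" not in part:
            continue  # skip fragments that do not follow the assumed format
        column, value = part.split(":", 1)
        terms[column.strip().lower()] = value.strip()
    return terms


# Hypothetical model response for the example question about California.
row = parse_key_terms("province: California; variety: Chardonnay")
```

Asking the model for a fixed, simple format in the prompt is what makes this kind of parsing reliable enough to drive a filter.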

4. Filter data

The goal of this step is to begin with broad queries and gradually narrow down the dataset using more specific criteria.

We’ll use the extracted terms from the previous step to filter our dataset. The flow variables created in the LLM-Based Processing component represent key attributes that are relevant to the target market, such as customer preferences, behaviors, or demographics.

A double loop is used to work through the dataset efficiently. The outer loop handles the output generated by the AI in the previous component, while the inner loop iterates over each individual flow variable within that output. In this example, the flow variables help identify customers residing in California who prefer Chardonnay, making them the target audience for our marketing campaign.

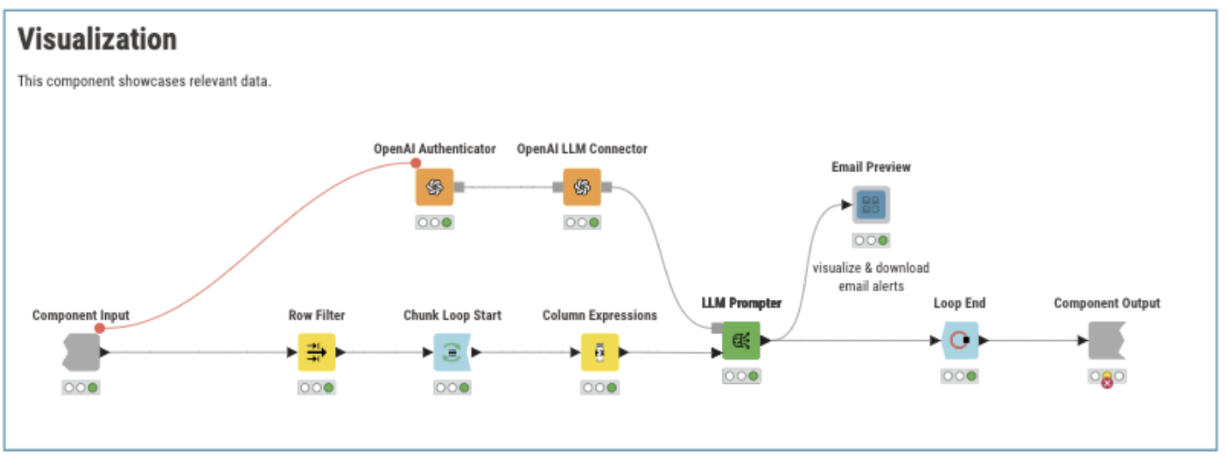
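The nested-loop filtering described above might look like the following in Python. This is a sketch under assumptions, not a translation of the KNIME loops: the function `filter_rows`, the sample rows, and the column names `province` and `variety` are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, str]


def filter_rows(rows: List[Row], criteria_sets: List[Dict[str, str]]) -> List[Row]:
    """Narrow the rows step by step, one criterion at a time."""
    remaining = rows
    for criteria in criteria_sets:               # outer loop: each set of extracted terms
        for column, wanted in criteria.items():  # inner loop: each term within that set
            remaining = [
                r for r in remaining
                if r.get(column, "").lower() == wanted.lower()
            ]
    return remaining


# Made-up sample data for illustration only.
rows = [
    {"name": "Ana", "province": "California", "variety": "Chardonnay"},
    {"name": "Ben", "province": "California", "variety": "Merlot"},
    {"name": "Cleo", "province": "Oregon", "variety": "Chardonnay"},
]
target = filter_rows(rows, [{"province": "california", "variety": "chardonnay"}])
```

Each pass through the inner loop shrinks the table further, which matches the goal of starting broad and narrowing down with more specific criteria.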

5. Visualization

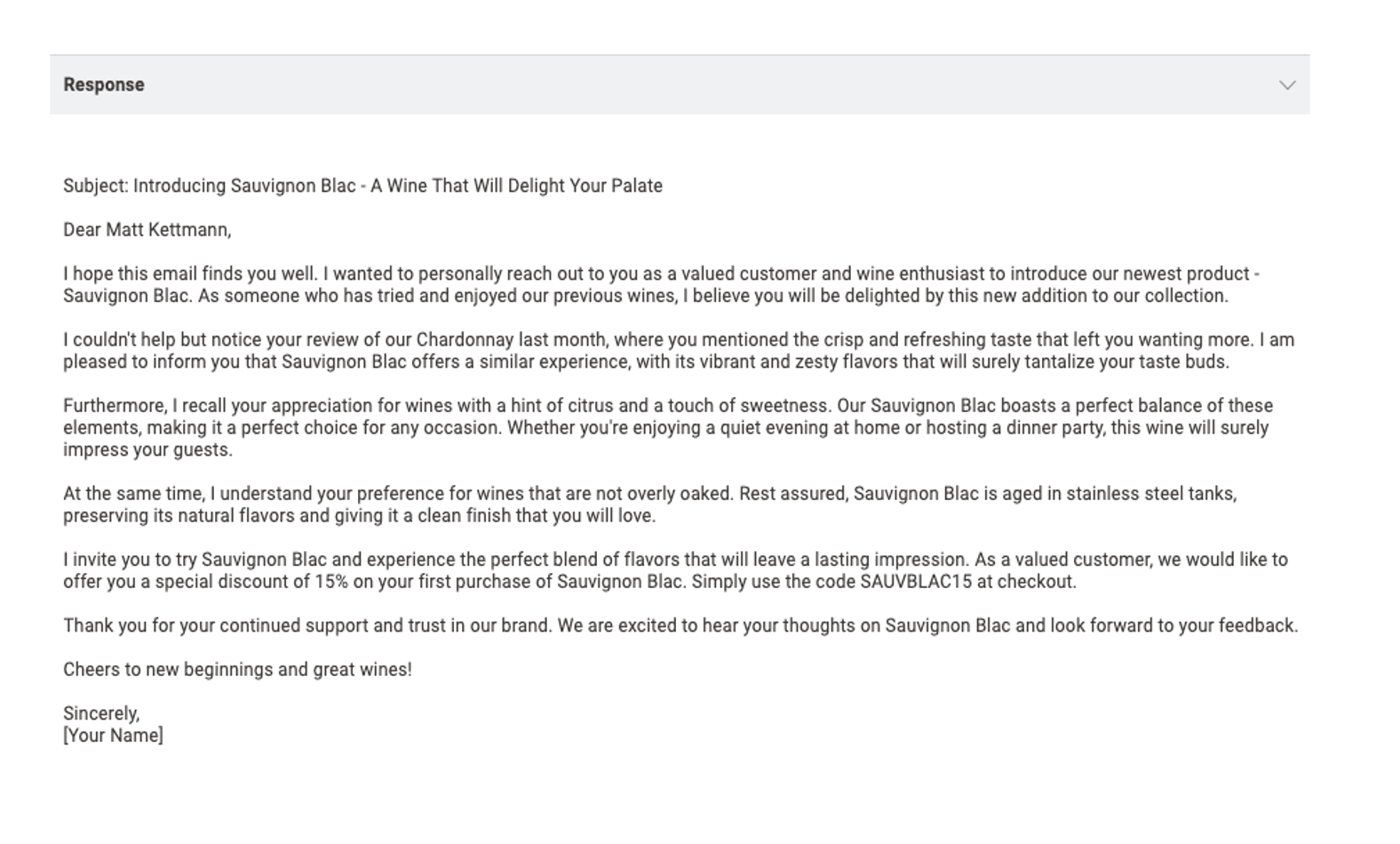

Here, we use generative AI to personalize email interaction with customers and present the results of the Filter Data component.

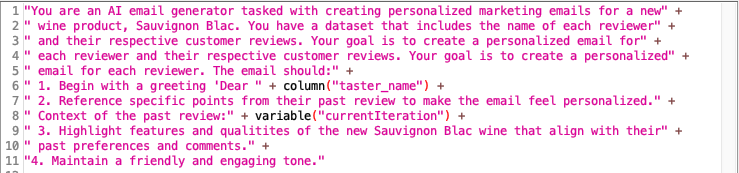

In this case, the LLM Prompter node processes customer reviews from the original dataset and generates personalized emails tailored to each individual within the target market segment.

Using GPT-3.5, the LLM Prompter node sends a specific prompt for each row in the input table to the model and receives a corresponding response.

Importantly, this node does not store information from previous responses, which ensures that every customer receives an email based solely on the data provided through the dataset.
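To make the row-by-row, stateless behavior concrete, here is a small Python sketch. It assumes a hypothetical prompt template and column names (`name`, `variety`, `description`), and it uses an `echo_model` stand-in rather than a real GPT-3.5 call, so it only demonstrates the pattern of one independent prompt per row.

```python
from typing import Callable, Dict, List

# Hypothetical template; the real prompt lives in the LLM Prompter configuration.
PROMPT_TEMPLATE = (
    "Write a short, friendly marketing email to {name}. "
    "They reviewed a {variety} and wrote: {description}"
)


def generate_emails(rows: List[Dict[str, str]], model: Callable[[str], str]) -> List[str]:
    """Send one independent prompt per row; nothing carries over between rows."""
    emails = []
    for row in rows:
        prompt = PROMPT_TEMPLATE.format(**row)  # built only from this row's data
        emails.append(model(prompt))
    return emails


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return f"[email for prompt: {prompt}]"


# Made-up sample rows for illustration only.
customers = [
    {"name": "Ana", "variety": "Chardonnay", "description": "Crisp with notes of pear."},
    {"name": "Cleo", "variety": "Chardonnay", "description": "Buttery and smooth."},
]
emails = generate_emails(customers, echo_model)
```

Because each prompt is built from a single row, one customer's details cannot leak into another customer's email.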

In the chat within the Interactive Chat App component, we identified that single women show a stronger preference for Chardonnay.

After passing this output through the Filter Data component, the dataset is segmented by both gender and marital status, allowing us to further refine our target audience.

Applications of GenAI in targeted marketing campaigns

Using GenAI alongside data filtration and segmentation allows us to create highly personalized marketing campaigns. By leveraging insights from LLMs, businesses can extract valuable information from market data, refine that data to isolate target segments, and generate tailored marketing content that speaks directly to the customer.

As AI technology continues to evolve, this workflow can easily be updated to incorporate newer AI models. This helps businesses remain competitive by keeping their personalized marketing strategies efficient.

Download the KNIME workflow and explore using visual workflows for generative AI to garner customer insights, parse text, and generate targeted marketing campaigns.