Agentic AI is emerging as a new way for organizations to access and use their data. Instead of teams manually searching through documents, databases, or internal tools, agentic applications can answer questions directly by retrieving information, processing questions, and generating useful responses, all while adapting to company-specific needs.

An Ask-Me-Anything (AMA) agent is an application that responds to internal business questions by selecting the right tools and data sources behind the scenes. In this article, we show how we built one in KNIME. Using the Agent Chat View node, you type questions into a chat interface, and the agent retrieves and structures answers by pulling data from multiple internal systems.

For example, you might ask:

- Do we have customers in Prague?
- What’s our history with Acme Corporation?
- Which customers have attended more than three webinars?

Our Ask Me Anything agent already handles a wide range of internal questions about customers, the community, KNIME users, and employees. As it gains access to more data and its logic improves, it becomes increasingly effective at retrieving and structuring relevant information.

This article breaks down how KNIME makes this possible and how you can build an agent like this for your own internal data.

What are the building blocks of an AI Agent?

Unlike static search engines, agentic AI applications analyze data, choose relevant sources, and generate clear responses. What makes them more than just automation is how the agent decides what to use and when.

An agentic application achieves this by using:

- Tools – Perform specific tasks like retrieving CRM data, summarizing text, or translating content. Some use GenAI for advanced capabilities. Tools are invoked by agents, which act as decision-making systems that determine which tool to use based on the context and user intent. The Workflow to Tool node enhances this by making it easier to convert workflows into callable tools, enabling agents to select and use these tools dynamically based on the task at hand.

- AI Workflows – Chain multiple tools together to complete more complex tasks. For example, a workflow might retrieve customer data using a data aggregation tool, pass it to a summarization tool, translate the summary using a language tool, and finally send it via email.

- Agents – The Agent Prompter node in KNIME consolidates tool selection, invocation, and iteration into a single step, allowing agents to dynamically select and call tools, adapting to each request rather than following a fixed sequence. The Agent Chat View node allows for direct interaction with users, while agents can also operate in the background as REST services (agentic services), ensuring they provide real-time, context-aware responses.

This structured approach is central to how agentic AI operates within KNIME. For a broader overview, check out this article, Agentic AI and KNIME.
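To make the distinction between a tool and an AI workflow concrete, here is a minimal Python sketch. It is not KNIME code: the `Tool` class, the three stand-in functions, and the sample record are all hypothetical, and the "summary" and "translation" are placeholders for what a GenAI-backed tool would do. It only illustrates the fixed-chain idea, where each tool's output becomes the next tool's input.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    """A named capability with a description an agent could read."""
    name: str
    description: str
    run: Callable[[str], str]


# Hypothetical stand-ins for real tools; each takes text and returns text.
def retrieve_customer_data(customer: str) -> str:
    records = {
        "Acme Corporation": "Acme Corporation has 3 open deals. Contacts attended 2 webinars."
    }
    return records.get(customer, f"No records found for {customer}.")


def summarize(text: str) -> str:
    # Placeholder "summary": keep only the first sentence.
    return text.split(". ")[0].rstrip(".") + "."


def translate_to_german(text: str) -> str:
    # Placeholder: a real tool would call a translation model.
    return f"[DE] {text}"


TOOLS = [
    Tool("customer_data", "Returns what we know about a customer.", retrieve_customer_data),
    Tool("summarizer", "Shortens a text to its key point.", summarize),
    Tool("translator", "Translates a text into German.", translate_to_german),
]


def run_workflow(tools: list[Tool], initial_input: str) -> str:
    """A fixed chain: every tool runs, in order, on the previous tool's output."""
    result = initial_input
    for tool in tools:
        result = tool.run(result)
    return result


print(run_workflow(TOOLS, "Acme Corporation"))
```

The chain always runs the same steps in the same order. An agent differs in that it reads the tool descriptions and decides per request which tools to call.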

Building an Ask Me Anything agent

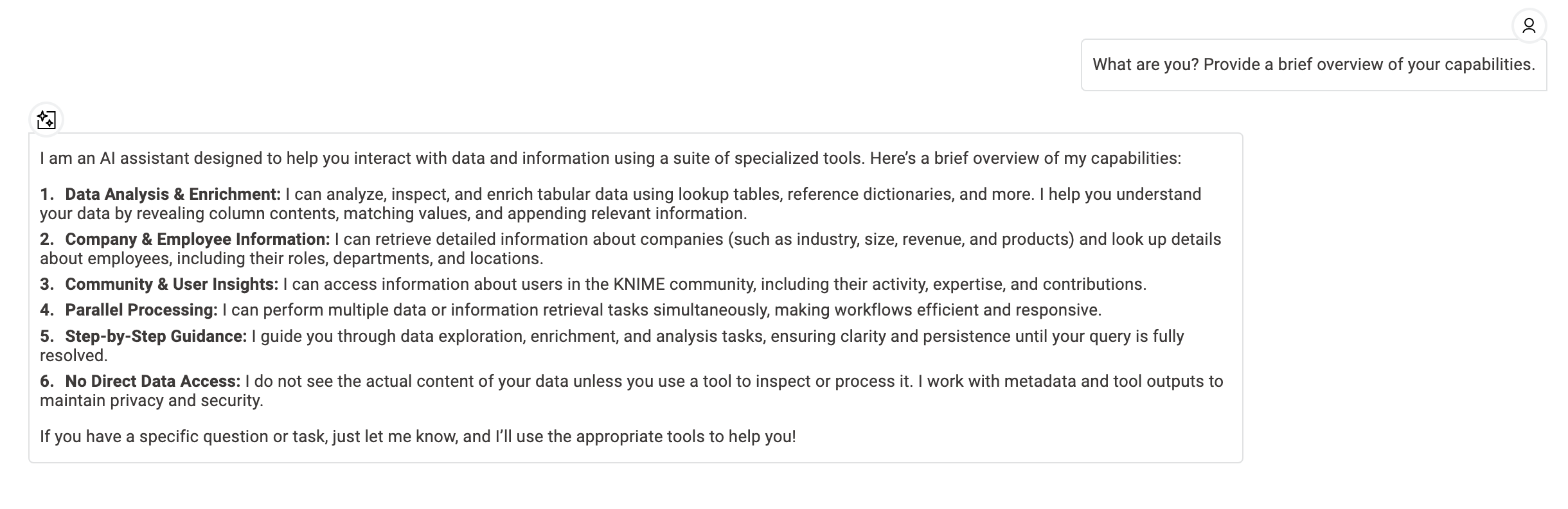

The agent is deployed as a KNIME Data App, a browser-based interface where users can type questions and receive immediate answers. The Agent Chat View node provides the conversational interface: users ask follow-up questions in natural language instead of filling in forms or writing queries.

Here's what it looks like:

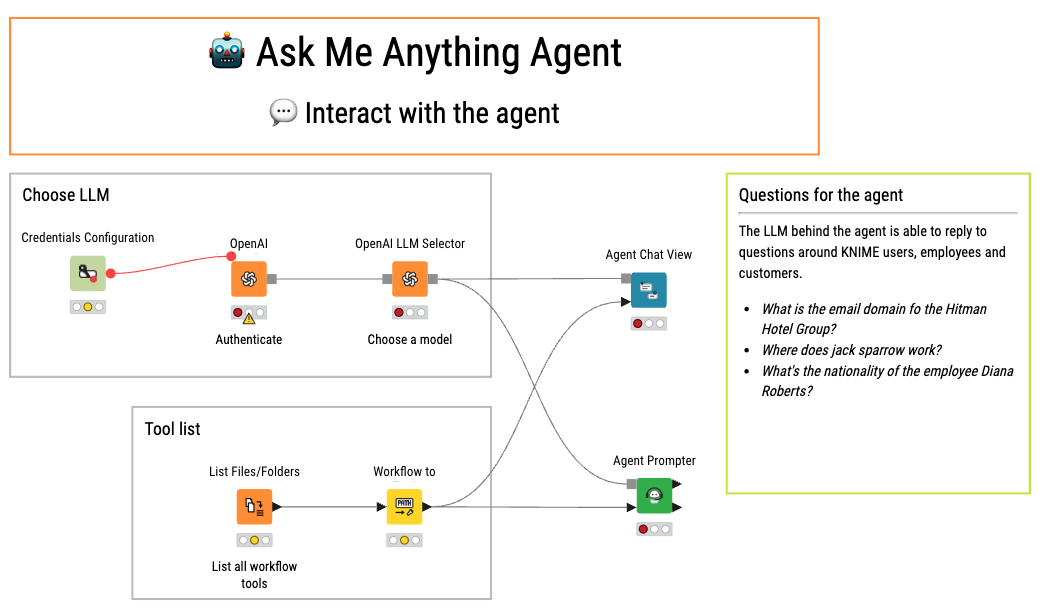

Behind the interface is a KNIME workflow that pulls everything together. Here’s what the agent workflow looks like:

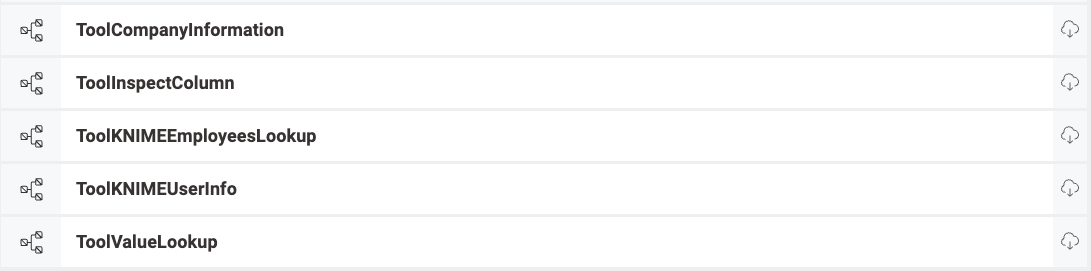

The tools the agent uses are stored on the KNIME Hub, which acts as a central repository of reusable workflows. Each workflow represents a specific capability, for example, retrieving information on: customer data, KNIME employees, top KNIME customers, or sending emails. These are the building blocks the agent can use to answer a user’s question.

Below you can see a space on the KNIME Hub containing the tools used in our application.

To access these tools, the workflow uses the Workflow to Tool node, which simplifies converting workflows into callable tools. This allows AI agents to dynamically select and use tools based on the specific needs of each request. Keeping tools in the Hub makes the system easy to maintain and extend. You can add new tools, or update existing ones, without modifying the agent logic itself.

Once the tools are available, the process moves to the Agent Chat View node, which lets the agent converse with the user in real time. Instead of relying on fixed rules or static scripts, the node manages the flow of the conversation dynamically and tailors each response to the user's input.

The agent doesn’t generate answers on its own; it selects the best tool for the question and executes it to retrieve relevant information.
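The select-then-execute pattern can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the registry, the tool names, and the canned answers are hypothetical, and `choose_tool` is a simple word-overlap heuristic standing in for the decision that a language model makes in the real application.

```python
import re
from typing import Callable

# Hypothetical tool registry: name -> (description, function).
REGISTRY: dict[str, tuple[str, Callable[[str], str]]] = {}


def register_tool(name: str, description: str, func: Callable[[str], str]) -> None:
    REGISTRY[name] = (description, func)


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def choose_tool(question: str) -> str:
    """Stand-in for the LLM's decision: pick the tool whose description
    shares the most words with the question."""
    q = _words(question)
    return max(REGISTRY, key=lambda name: len(q & _words(REGISTRY[name][0])))


def answer(question: str) -> str:
    """Select a tool, run it, and return its result tagged with the tool name."""
    name = choose_tool(question)
    _, func = REGISTRY[name]
    return f"[{name}] {func(question)}"


register_tool("customer_data", "Look up customers by city, company, or history.",
              lambda q: "2 customers found.")
register_tool("employee_directory", "Find employees, teams, and roles.",
              lambda q: "1 employee found.")

print(answer("Do we have customers in Prague?"))

# Adding a capability later touches only the registry, not choose_tool or answer.
register_tool("webinar_attendance", "Count webinars attended per customer contact.",
              lambda q: "5 contacts attended more than three webinars.")
print(answer("How many webinars did this contact attend?"))
```

Note that the quality of the choice depends entirely on the descriptions, which is why the wording of the prompt and of each tool description matters so much.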

How the agent is instructed: Building the prompt

How you describe the agent’s world matters because that shapes how it responds. The prompt controls everything: it is where the agent gets its instructions, a kind of briefing before it answers the question. The prompt tells it: These are the tools you have. This is what each one does. Here’s how to use them. This is who you are. And here’s the question you need to answer. With all of that context, the agent figures out the best way to respond. This full prompt is assembled dynamically inside the Agent Prompter node, which pulls in tool descriptions from the Hub, system instructions, and the user’s question in real time.
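As a rough illustration of what such a briefing contains, the following Python sketch assembles the three ingredients into one string. The layout, the `build_prompt` function, and the tool names are our own assumptions for this example; the actual format that the Agent Prompter node produces internally is not shown in this article.

```python
def build_prompt(system_instructions: str, tools: dict[str, str], question: str) -> str:
    """Assemble the briefing: who the agent is, which tools it has, and the question."""
    tool_lines = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    return (
        f"{system_instructions}\n\n"
        f"You have access to these tools:\n{tool_lines}\n\n"
        f"Question: {question}"
    )


# Hypothetical inputs, for illustration only.
SYSTEM = "You are an assistant that answers internal business questions."
TOOL_DESCRIPTIONS = {
    "company_information": "Returns details about a company, given its name.",
    "send_email": "Sends an email with the given subject and body.",
}

prompt = build_prompt(SYSTEM, TOOL_DESCRIPTIONS, "Is Acme Corporation a customer?")
print(prompt)
```

Because the tool list is passed in rather than hard-coded, the same function produces an updated prompt whenever a tool is added or its description changes.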

There are two common approaches:

- Technology-centric: listing specific systems like “Salesforce” or “Zendesk” and explaining what each one does. That might look something like:

But that approach can quickly become fragile. If the underlying technology changes, say, your company switches from Salesforce to another CRM, then you’ll need to update every prompt that references it.

- Information-centric: A more robust approach is to make the prompt focus on what kind of information the tool provides, not how it’s implemented. That same part of the prompt might then say:

This version is clearer, easier to maintain, and more aligned with how the agent navigates tools: not in systems, but in answers.
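The maintenance difference between the two styles can be shown with a small Python sketch. The tool names and descriptions below are hypothetical examples written for this illustration, not the prompts used in our application. The helper simply lists which descriptions would need rewriting if a named system were replaced.

```python
# Hypothetical tool descriptions, written two ways.
technology_centric = {
    "salesforce_query": "Queries Salesforce for account and opportunity records.",
    "zendesk_search": "Searches Zendesk for support tickets.",
}

information_centric = {
    "customer_accounts": "Returns account details and deal history for a customer.",
    "support_history": "Returns past support requests for a customer.",
}


def descriptions_to_rewrite(descriptions: dict[str, str], replaced_system: str) -> list[str]:
    """List the tools whose name or description mentions a system being replaced."""
    needle = replaced_system.lower()
    return [
        name for name, text in descriptions.items()
        if needle in name.lower() or needle in text.lower()
    ]


print(descriptions_to_rewrite(technology_centric, "Salesforce"))   # ['salesforce_query']
print(descriptions_to_rewrite(information_centric, "Salesforce"))  # []
```

With information-centric wording, a CRM migration changes only the tool's implementation; the text the agent reads stays the same.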

With the Agent Prompter node, assembling and updating the prompt becomes easier and more flexible. Because the node rebuilds the prompt from the current tool descriptions in real time, the agent adapts to new tools and data sources without manual prompt edits. Let’s take a closer look at what one of these tools actually looks like.

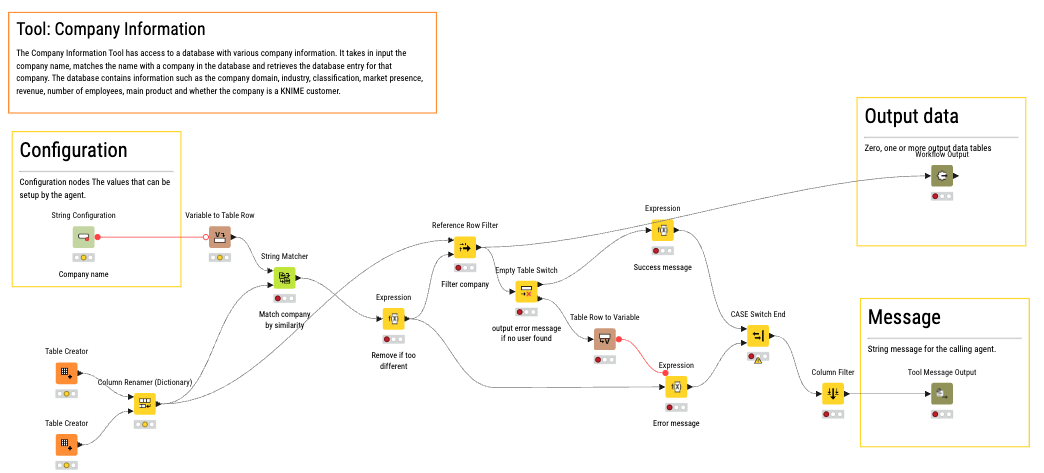

Build a company information tool

This tool is built as a KNIME workflow that accesses a company database to retrieve detailed company information. It takes the company name as input, matches it with entries in the database, and retrieves associated data such as the company's domain, industry, classification, market presence, revenue, number of employees, main product, and whether the company is a KNIME customer.

The KNIME workflow behind the Company Information Tool ensures that company data is easily accessible and up-to-date. If the database or data sources change, only the connector needs to be updated, keeping the logic intact and the workflow adaptable.
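The same separation between connector and lookup logic can be sketched in Python. This is not the KNIME workflow itself: the table schema, the sample row, and both function names are assumptions for illustration, and an in-memory SQLite database stands in for the real company database.

```python
import sqlite3
from typing import Optional


def connect_company_db() -> sqlite3.Connection:
    """The 'connector': the only part that should change if the data source changes.
    Here it builds a throwaway in-memory database with a made-up row."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    conn.execute(
        "CREATE TABLE companies (name TEXT, domain TEXT, industry TEXT, "
        "employees INTEGER, is_customer INTEGER)"
    )
    conn.execute(
        "INSERT INTO companies VALUES (?, ?, ?, ?, ?)",
        ("Acme Corporation", "acme.example", "Manufacturing", 1200, 1),
    )
    return conn


def company_information(conn: sqlite3.Connection, company_name: str) -> Optional[dict]:
    """Match the name case-insensitively and return the associated fields, or None."""
    row = conn.execute(
        "SELECT * FROM companies WHERE lower(name) = lower(?)",
        (company_name.strip(),),
    ).fetchone()
    if row is None:
        return None
    info = dict(row)
    info["is_customer"] = bool(info["is_customer"])
    return info


conn = connect_company_db()
print(company_information(conn, "acme corporation"))
print(company_information(conn, "Unknown Ltd"))
```

Swapping SQLite for another source would mean rewriting `connect_company_db` only, which mirrors the point above about keeping the lookup logic intact.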

Most organizations already have dozens of technologies, APIs, and data silos. KNIME helps teams integrate those systems and create a unified view of the data people actually need. That same integration layer becomes the foundation for building data-aware tools.

Once you have these tools, you can pair them with large language models to build intelligent agents. The KNIME Hub plays a key role here too. Not only does it help manage and organize tools, but it also adds safeguards, like limiting which AI models a tool is allowed to use. You can define which LLMs are allowed based on data sensitivity or trust level and enforce it through Hub-level policies. That control matters when you're dealing with real enterprise data.
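The idea of an allow-list keyed by data sensitivity can be illustrated with a short Python sketch. This does not show how the KNIME Hub implements or configures its policies; the sensitivity levels, model names, and `check_model_allowed` function are all hypothetical and only demonstrate the principle.

```python
# Hypothetical policy: which models may see data of each sensitivity level.
ALLOWED_MODELS = {
    "public": {"hosted-model-a", "hosted-model-b", "self-hosted-model"},
    "internal": {"hosted-model-a", "self-hosted-model"},
    "confidential": {"self-hosted-model"},
}


def check_model_allowed(model: str, data_sensitivity: str) -> None:
    """Raise if the model is not approved for this sensitivity level."""
    allowed = ALLOWED_MODELS.get(data_sensitivity)
    if allowed is None:
        raise ValueError(f"Unknown sensitivity level: {data_sensitivity!r}")
    if model not in allowed:
        raise PermissionError(f"{model!r} is not approved for {data_sensitivity} data")


# A tool handling confidential data would run this check before calling a model.
check_model_allowed("self-hosted-model", "confidential")  # passes silently

try:
    check_model_allowed("hosted-model-b", "confidential")
except PermissionError as err:
    print(err)
```

Enforcing the check centrally, rather than inside each tool, means a policy change takes effect everywhere at once.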

How this works in practice

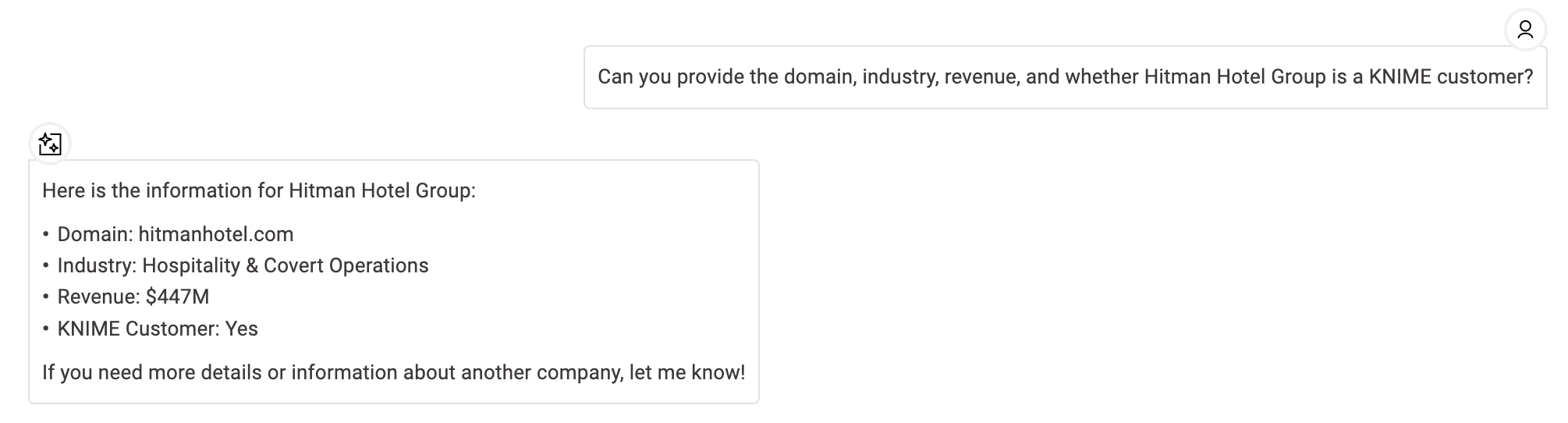

Now that we’ve outlined the building blocks, tools, and the Agent Chat View node used in this application, let’s see how the workflow operates in a real scenario.

Let’s say a team member needs a quick overview of a company and whether the company is a KNIME customer. This kind of information is usually scattered across Salesforce, shared drives, and account notes. Instead of manually searching through these data sources, the team member submits a query into the agent’s chat interface.

The agentic application identifies the type of request and determines which tools are needed. In this case, the Company Information tool is used to retrieve key company details, such as the company domain, industry, revenue, and whether the company is a KNIME customer.

Additionally, if the request involves matching company information with other reference data, the Value Lookup tool could be used. For example, it could match customer IDs with a reference dictionary to retrieve contact details or other relevant business insights.
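A lookup of this kind is easy to picture in Python. The sketch below is a plain dictionary lookup written for illustration, not the Value Lookup tool itself; the function signature, the customer IDs, and the email address are made up.

```python
from typing import Any


def value_lookup(rows: list[dict[str, Any]], reference: dict[str, Any],
                 key: str, new_field: str, default: Any = None) -> list[dict[str, Any]]:
    """Return copies of rows with new_field filled from reference[row[key]]."""
    return [{**row, new_field: reference.get(row.get(key), default)} for row in rows]


# Hypothetical data: customer rows and a reference dictionary of contacts.
customers = [
    {"customer_id": "C-001", "company": "Acme Corporation"},
    {"customer_id": "C-999", "company": "Unknown Ltd"},
]
contacts = {"C-001": "jane.doe@acme.example"}

enriched = value_lookup(customers, contacts, key="customer_id", new_field="contact_email")
for row in enriched:
    print(row)
```

Rows without a match keep their original fields and receive the default value, so a missing reference entry does not drop the customer from the result.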

By using these tools, the agent quickly retrieves and organizes the relevant company and employee information, offering a structured summary of KNIME’s relationship with the company. Instead of spending time searching through multiple data sources, the team gets a fast, reliable summary, all generated by the agent in real time.

Getting Started with KNIME for Agentic AI

With KNIME, you can adapt and scale this approach to fit your unique needs. Whether it’s retrieving customer insights, internal policies, technical documentation, or other enterprise knowledge, the same agentic AI principles apply. By adding new tools and refining the workflow, you can create a more capable and adaptable internal data retrieval system.

The example in this article is just one starting point. With the right tools and logic, AI agents can go far beyond internal search: they can automate decisions, adapt to complex tasks, and scale across different domains. Similar principles can support a wide range of use cases, like content compliance. With every new tool, the agent gets closer to answering what matters, without anyone needing to dig for it.