Programming is fun, or at least many aspects of it are. What's usually not considered fun is writing documentation and... testing. For the former I agree, but I will show you that the latter can also be fun, even (or especially) with KNIME, and even more so with some of the nice additions to the testing framework in 2.10.

Although most programmers don't like writing test cases very much, it's an essential part of software development and often takes more time than solving the actual problem. That makes it all the more important to make testing as easy and convenient as possible. This is especially true if you are testing components in a larger framework such as

Testing a node is not easily possible with standard testing approaches such as JUnit, because a node relies on the KNIME Core framework, which handles tasks such as execution and data handling. Therefore, a node must be tested within a running KNIME instance.

The straightforward approach is to use a "normal" KNIME workflow that executes the node with different combinations of input data and settings and checks whether the outputs are as expected. I don't want to go into detail about how a decent test workflow is built and what additional nodes are available for checking results. This is described extensively in the "Testing Framework" manual that you get when you install the "KNIME Testing Framework" (look in plugins/org.knime.testing_x.y.z/Regression Tests.pdf). Instead, I want to highlight some nice improvements in KNIME 2.9 and 2.10 that make testing much easier than before.

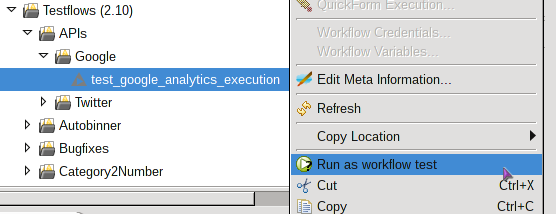

Before KNIME 2.9 there was a separate Eclipse application with which you could execute test workflows. This was quite complicated, and you needed to restart the application every time you changed something in your code or in the test workflow. Since 2.9 you can execute test workflows directly within KNIME. If you right-click on a workflow in your local workspace, you can select "Run as workflow test".

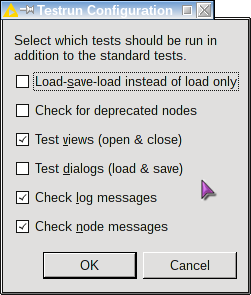

KNIME will ask you which sub-tests you want to execute (just stick with the default for now) and then immediately execute the workflow.

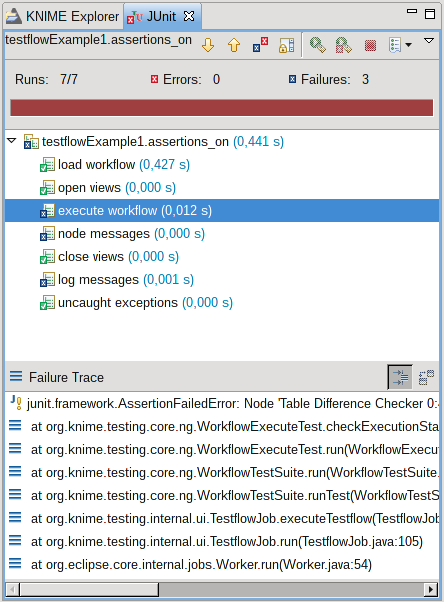

The results will be recorded and displayed in Eclipse's JUnit view.

You can then inspect the failures or errors, modify the code, and, if hot code replacement works as expected, immediately re-run the test. One nice improvement in 2.10 is that you can run several test workflows at once. Just select a set of workflows or a workflow group and choose "Run as workflow test". All contained workflows will then be executed one after the other. Workflows with failures will stay open for further inspection, successful workflows will be closed automatically, and the JUnit view will show the results for all workflows.

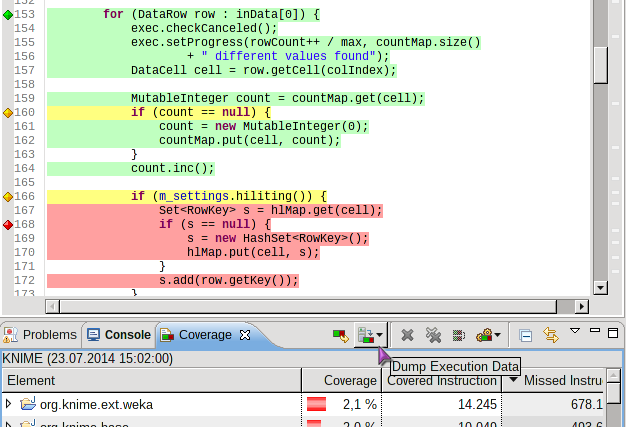

If you have a closer look at the dialog that opens before execution, you will see that a test run consists of several sub-tests that you can switch on or off. You can choose, for example, to open all views (if there are any) before execution, leave them open during execution, and close them afterwards. The test framework checks whether there are any problems while updating the view during or after node execution. Some tests are mandatory, such as the actual execution of the workflow, but also - and this is new in 2.10 - a check in which highlight events are fired after execution. For each output table of every executed node in the workflow, some rows are automatically first highlighted and then cleared again. In combination with the views test, this makes automated discovery of bugs in highlight handling much easier.

One difficulty still remains (which is true for testing in general): how do I know whether my test case is sufficient, i.e. whether it tests all aspects of the implementation? Fortunately, there is an easy and extremely convenient solution called EclEmma/JaCoCo. JaCoCo is a lightweight code coverage agent that can instrument any Java application on the fly and record which lines of code have been executed and which haven't. EclEmma is an Eclipse plug-in that makes using JaCoCo super easy.

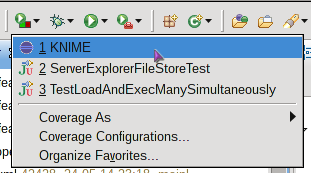

If you install EclEmma into your Eclipse SDK you get a new run mode called "Coverage" (in addition to "Run" and "Debug"). Just start KNIME with "Coverage" and EclEmma will record every single line of code that is executed. It will slow down execution a bit, but not as much as one might initially fear. Now execute a test workflow and create an execution data dump in the "Coverage" view in Eclipse.

This takes a few seconds and then your Java code editor will suddenly get really colorful. The more green lines you see, the better: green means that this piece of code was executed and therefore tested. Red lines were never executed and indicate that your test case is probably not sufficient yet, and yellow lines usually occur with conditional statements where not all of the possible alternatives have been executed. Now you can update your workflow, add some more tests, re-run the workflow, and check the coverage again - without having to restart.
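To make the three colors concrete, here is a minimal sketch in plain Java. The `Clamp` class is a hypothetical helper invented for illustration and is not part of KNIME or of any node; the `main` method stands in for a test workflow that exercises only some of the cases. The color annotations in the comments describe what one would expect EclEmma to show for exactly these two calls.

```java
// Hypothetical helper for illustration only; not part of KNIME.
final class Clamp {
    // Restricts value to the range [min, max].
    static int clamp(int value, int min, int max) {
        if (value < min) {   // green: both outcomes are exercised below
            return min;      // green: reached by clamp(-5, 0, 10)
        }
        if (value > max) {   // yellow: only the "false" outcome is exercised
            return max;      // red: never executed by the calls below
        }
        return value;        // green: reached by clamp(3, 0, 10)
    }

    public static void main(String[] args) {
        // Stand-in for an incomplete test: no call uses a value above max.
        System.out.println(clamp(-5, 0, 10)); // prints 0
        System.out.println(clamp(3, 0, 10));  // prints 3
    }
}
```

Adding one more case with a value above `max`, for example `clamp(42, 0, 10)`, would be expected to turn the yellow and red lines green on the next coverage dump.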

Of course, this kind of testing has to be taken with a few grains of salt: even fully "green" code can still contain errors because JaCoCo "only" reports branch coverage and not path coverage. Also, exception handling is very hard to test, and interactive parts of a node such as the dialog or the view currently cannot be fully tested by the framework at all. Only some basic tests (e.g. that no exceptions are thrown) are possible. But still, once you start writing testflows for your nodes using the techniques presented here, you may notice that testing can indeed be fun. And if you discover bugs before your users find them, you have not only made your users happier but probably also saved yourself some time, which you can invest in even more testing.
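The difference between branch coverage and path coverage can be sketched with a small, deliberately buggy method. The `PathDemo` class is hypothetical and exists only for this illustration. The first two calls together take every branch of both `if` statements, so a branch coverage report would be expected to show the method as fully covered, yet the one combination that fails is never executed.

```java
// Hypothetical example for illustration only; not part of KNIME.
final class PathDemo {
    static int compute(boolean resetDivisor, boolean divide) {
        int divisor = 1;
        if (resetDivisor) {
            divisor = 0;                // harmless on its own
        }
        int result = 10;
        if (divide) {
            result = result / divisor;  // fails only if both flags are true
        }
        return result;
    }

    public static void main(String[] args) {
        // These two calls cover every line and every branch outcome.
        System.out.println(compute(true, false)); // prints 10
        System.out.println(compute(false, true)); // prints 10

        // The untested path (both flags true) still contains a bug.
        try {
            compute(true, true);
        } catch (ArithmeticException e) {
            System.out.println("division by zero on the untested path");
        }
    }
}
```

With two independent conditions there are four paths through the method but only four branch outcomes, and two well-chosen calls are enough to hit all of the latter. This is why all-green code is a useful signal but not a proof of correctness.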