Data Enrichment, Visualization, Time Series Analysis, Optimization

There has been a lot of talk about the Internet of Things lately, especially since Google's purchase of Nest, which officially opened the race toward intelligent household systems.

Intelligent means controllable from a remote location and capable of learning the inhabitants’ habits and preferences. Companies working in this field have multiplied over the last few years, and some of them have been acquired by bigger companies, such as SmartThings by Samsung.

However, it is not only households that can be intelligently interconnected: cities – i.e. smart cities – represent another area of application for the Internet of Things. One of the first smart cities in the world was Santander, in the north of Spain. Sensors have been installed around the city to constantly monitor temperature, traffic, weather conditions, and parking facilities.

The Internet of Things poses a great challenge for data analysts: on the one hand because of the very large amounts of data created over time, and on the other because of the algorithms needed to make the sensor-equipped object (house or city) capable of learning and therefore smarter.

We at KNIME decided to take up this challenge and put KNIME to work to:

• collect the large amount of data generated by the Internet of Things sensors;

• enrich the original data with responses from external RESTful services;

• transform the data into a more meaningful set of input features;

• apply time series analysis to add some intelligence into the system;

• optimize the smarter system to get the best performance with the leanest feature set;

• integrate a number of different visualization tools, from R graphic libraries to OpenStreetMap and network graphs, into our KNIME workflow.

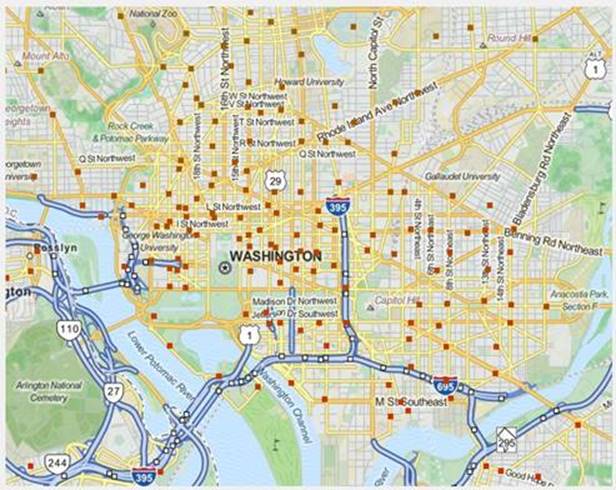

This project focuses on the data from a bike share system in Washington DC, called Capital Bikeshare. Each Capital Bikeshare bike carries a sensor, which sends the bike's current location in real time to a central repository. All historical data are made available for download on Capital Bikeshare’s web site. These public data have been downloaded and used for this study.

The downloaded data have also been enriched with topology, elevation, local weather, holiday schedules, traffic conditions, business locations, tourist attractions, and other types of information widely available on the Internet via web or REST services. After transformation and the application of machine learning algorithms for time series prediction, an optimized alarm system can warn us when bike restocking will be necessary at a given bike station in the next hour.
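To make the idea of a next-hour restocking alarm concrete, here is a minimal Python sketch. It is not the method used in the whitepaper: it swaps in a simple seasonal-naive forecast (the average net flow seen at the same hour on recent days) in place of the machine learning models, and the function names, the 10%/90% fill thresholds, and the sample numbers are all hypothetical.

```python
from statistics import mean


def forecast_net_flow(net_flow_by_day, hour, lookback_days=7):
    """Seasonal-naive forecast (an assumption, not the whitepaper's model).

    net_flow_by_day: list of days, each a list of 24 hourly values of
    (bikes arrived - bikes departed) at one station.
    Returns the average net flow observed at `hour` over the most recent days.
    """
    recent = net_flow_by_day[-lookback_days:]
    return mean(day[hour] for day in recent)


def restocking_alarm(bikes_now, capacity, expected_net_flow, low=0.1, high=0.9):
    """Return 'add bikes', 'remove bikes', or None for the coming hour.

    `low` and `high` are hypothetical fill-level thresholds.
    """
    # Predicted number of bikes at the station, clamped to the physical range.
    predicted = min(max(bikes_now + expected_net_flow, 0), capacity)
    fill = predicted / capacity
    if fill <= low:
        return "add bikes"
    if fill >= high:
        return "remove bikes"
    return None


# Made-up history: three days on which the station loses bikes at 8 a.m.
history = []
for morning_outflow in (-6, -8, -7):
    day = [0] * 24
    day[8] = morning_outflow
    history.append(day)

expected = forecast_net_flow(history, hour=8)
print(restocking_alarm(bikes_now=8, capacity=20, expected_net_flow=expected))
```

In a real system the forecast would also draw on the enriched features mentioned above (weather, holidays, and so on), and the thresholds would be tuned rather than fixed by hand.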

The results of this work, together with the whitepaper, data, and workflows, have been made available in the KNIME whitepapers pool at http://www.knime.org/white-papers#IoT.

In this kind of cutting-edge problem, where a very large amount of data is generated, it is imperative to adopt a scalable approach that can grow together with the application in the future. A scalable approach means not only handling bigger data faster, but also reaching out to new external data sources, integrating different complementary tools to refine the analytics with the newest emerging algorithms and techniques, and collaborating within the analyst team to exploit the group’s collective competence. Only an open architecture can provide such a flexible environment, one in which the tool bench can be expanded and changed in ways that cannot be predicted today.

The Internet of Things is a very good example of the data explosion that is happening in most fields, from social media to sensor-driven processes. But how much information can more data actually convey? This is of course highly dependent on the amount of intelligence we apply to it. Pure data plumbing and systematization do not generally produce more intelligent applications. Only the injection of data analytics algorithms from statistics and machine learning can make applications capable of learning and therefore smarter.