Ángel Molina, data analyst, consultant, and supportive member of the KNIME community, discusses the Large Language Models you can use with KNIME's AI extension, tips on managing vectors, plus examples of applications for Natural Language Processing and vectors with KNIME.

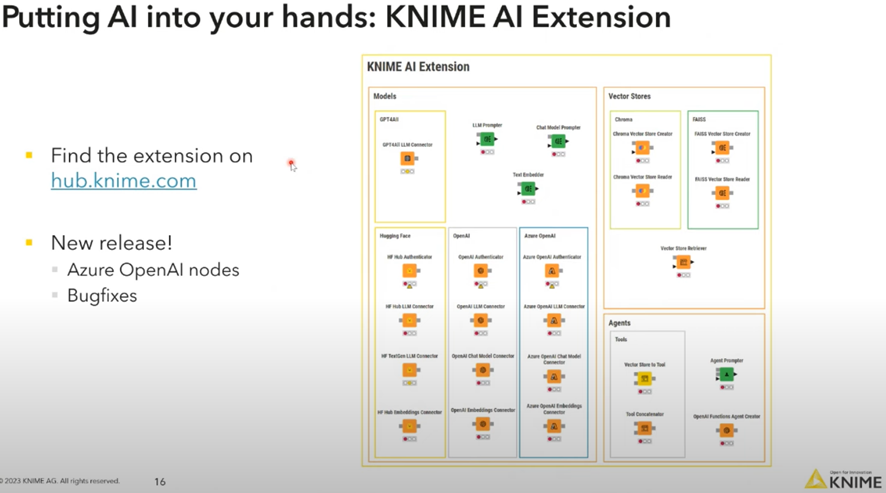

Natural language processing (NLP) is constantly evolving and changing the way we interact with text and text-based data. KNIME's AI extension opens up new possibilities in text processing by letting us implement Large Language Models (LLMs) and leverage technologies like Azure OpenAI Service from its visual interface, without having to code.

LLMs in KNIME

Let's start by highlighting the LLMs that can be integrated into KNIME. For those who follow artificial intelligence (AI) development closely, names like OpenAI, Azure OpenAI, Hugging Face and GPT4All are familiar. The models available through these providers, including ChatGPT, GPT-3, GPT-4, and Codex, represent a gateway to advanced text comprehension and generation.

OpenAI

You likely don’t need a summary of OpenAI, but just in case:

OpenAI is an AI research organization based in San Francisco, California. Founded in 2015, its focus is on the development of AI with an emphasis on safety and benefit to humanity. Its mission is to promote transparency, ethics and security in the field. OpenAI is known for its work in creating advanced language models, such as GPT-3 and GPT-4, which have demonstrated exceptional performance in NLP tasks. The organization publishes much of its research, and actively collaborates with the scientific community in AI research and development.

In addition to research, OpenAI offers AI-related services and products. Its technological advances have applications in various industries, from healthcare to content generation and machine translation.

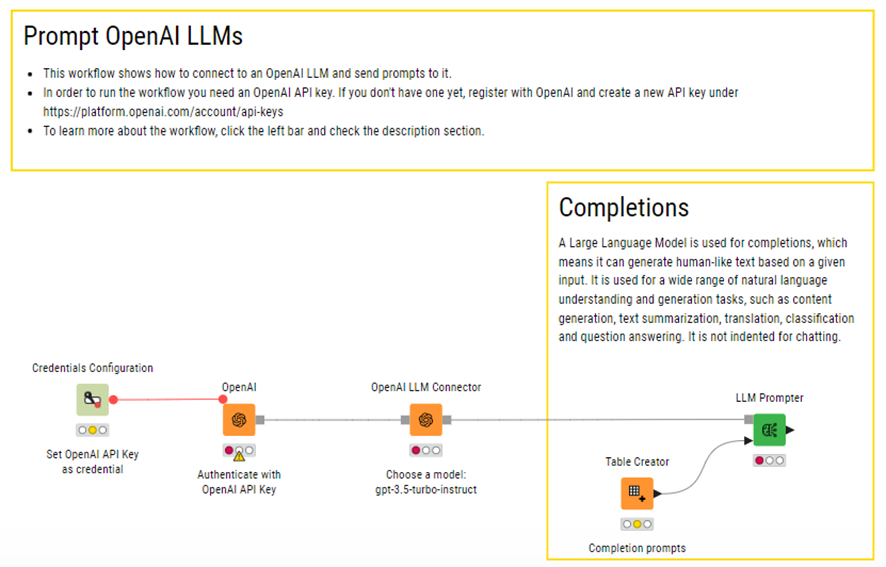

You can easily download and run the workflow directly in your KNIME installation. We recommend that you use the latest version of KNIME Analytics Platform for optimal performance.

To run the workflow, you need an OpenAI API key. If you don't have one yet, register with OpenAI and create a new API key at https://platform.openai.com/account/api-keys.

OpenAI will deprecate models on a regular basis, so take a look at https://platform.openai.com/docs/models/gpt-3 for information on available models. For OpenAI pricing information, visit https://openai.com/pricing.

The example workflow shows how to connect to an OpenAI LLM and send prompts to it. Once you have your OpenAI API key, you’re set to download the workflow from the KNIME Hub.
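If you are curious what sending a prompt looks like outside the visual interface, here is a minimal sketch that builds (but does not send) a request to OpenAI's chat completions endpoint using only the Python standard library. The helper name `build_chat_request`, the default model name, and the placeholder key are my own assumptions for illustration; the KNIME nodes take care of all this for you, and their internals may differ.

```python
import json
import urllib.request

OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key, prompt, model="gpt-3.5-turbo"):
    """Build, without sending, a chat completion request (hypothetical helper)."""
    payload = {
        "model": model,  # assumed model name; check OpenAI's list of available models
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key travels as a bearer token
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key: replace it with your own before actually sending anything.
request = build_chat_request("sk-your-key-here", "Summarize KNIME in one sentence.")
print(request.get_method(), request.full_url)
```

With a real key, passing `request` to `urllib.request.urlopen` would send the prompt, and the generated text would come back in the JSON response under `choices[0].message.content`.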

Hugging Face

Hugging Face is an AI-based NLP company. Founded in 2016 in Brooklyn, New York, its open-source platform, Hugging Face Hub, houses an extensive collection of pre-trained AI models. These models are essential for NLP tasks such as text generation, machine translation, sentiment analysis, and more. Hugging Face’s high-quality models are based on cutting-edge architectures such as BERT and GPT. These models are customizable to meet the specific needs of businesses.

Recently, Hugging Face entered a collaboration with Amazon Web Services (AWS) to expand the availability of its cloud models.

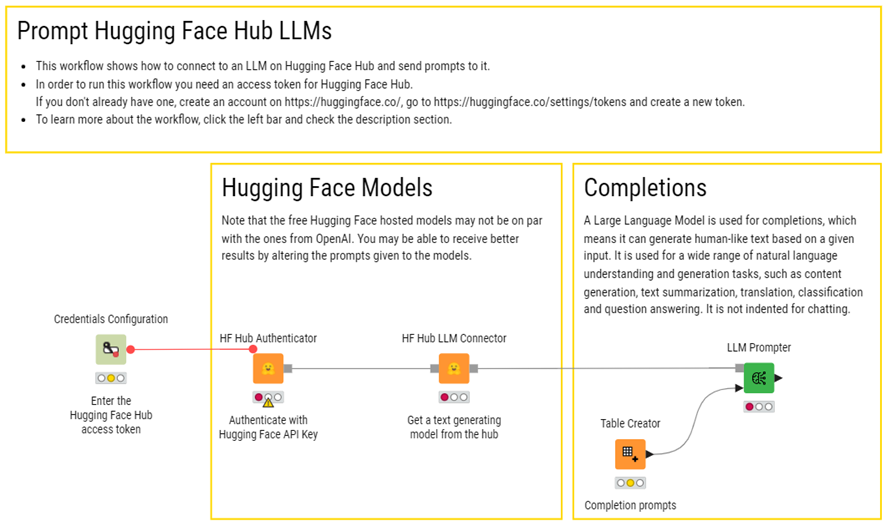

To run this workflow directly in your KNIME installation, you will first need an access token for Hugging Face Hub. If you don't already have one, create an account at https://huggingface.co/. Then, go to https://huggingface.co/settings/tokens and create a new token.

This workflow uses the "HuggingFaceH4/zephyr-7b-alpha" model. Text generation models can be found on Hugging Face Hub (see external resources).

Only models that support the Inference API can be used. To connect to an LLM on the Hugging Face Hub and send prompts to it, download the example workflow.

Azure OpenAI Service

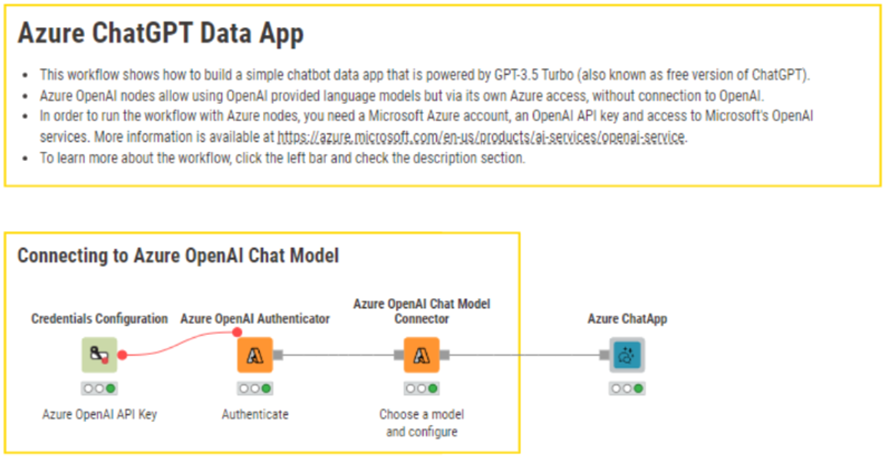

Azure OpenAI Service is a Microsoft offering that provides access via a REST API to OpenAI language models, including GPT-4 and GPT-3.5 Turbo. These models allow companies to use advanced NLP for tasks such as content generation, summarization, semantic search, and natural language-to-code translation.
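To make the REST access a bit more concrete, the sketch below assembles the URL and headers for a chat completion call against an Azure OpenAI resource. Unlike the OpenAI API, the model is addressed through a deployment name in the URL, and the key is sent in an `api-key` header. The function name `azure_chat_url`, the resource and deployment names, and the chosen `api-version` value are assumptions for illustration; use the values from your own Azure resource.

```python
from urllib.parse import quote, urlencode


def azure_chat_url(resource, deployment, api_version="2023-05-15"):
    """Return the chat completions URL for an Azure OpenAI deployment (hypothetical helper)."""
    base = (
        f"https://{resource}.openai.azure.com"
        f"/openai/deployments/{quote(deployment)}/chat/completions"
    )
    return f"{base}?{urlencode({'api-version': api_version})}"


# Hypothetical resource and deployment names.
url = azure_chat_url("my-resource", "my-gpt4-deployment")
headers = {
    "api-key": "<your-azure-openai-key>",  # key issued by your Azure OpenAI resource
    "Content-Type": "application/json",
}
print(url)
```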

Azure OpenAI Service offers security and content filtering benefits, making it ideal for enterprise applications that require advanced language processing with risk mitigations. The available models allow companies to create intelligent applications that can understand and generate text in more sophisticated ways.

This service allows businesses to harness the power of OpenAI models within their Microsoft Azure infrastructure, ensuring security and business compatibility. It is a cutting-edge technology solution that can power business applications based on AI and NLP, providing greater value and functionality to companies across sectors.

To run workflows with Azure nodes, you'll need a Microsoft Azure account. When you deploy a resource via Azure OpenAI, Azure provides you with the keys needed to access the OpenAI models. These keys are what connect your KNIME installation to the powerful tools of Azure OpenAI Service.

To run the workflow with Azure nodes directly in your KNIME installation, you need a Microsoft Azure account, an API key, and access to Azure OpenAI Service. More information is available at Microsoft’s Azure OpenAI Service page.

If you’d like to try connecting to Azure OpenAI nodes, download the example workflow.

Vectors in text processing: in a nutshell

In text processing, "vectors" are numerical representations of data used in mathematical operations and analysis. Each text element is mapped to a vector that captures certain features or properties. These vectors allow mathematical operations and analysis to be performed on text, which is essential for tasks such as information retrieval and semantic search.
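As a small illustration, the sketch below maps short texts to word-count vectors and compares them with cosine similarity. Word counts over a fixed vocabulary are only one simple way of building text vectors, chosen here for brevity; the vocabulary and sample texts are made up for the example.

```python
import math
from collections import Counter


def bag_of_words(text, vocabulary):
    """Map a text to a vector of word counts, one position per vocabulary word."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


vocabulary = ["knime", "workflow", "vector", "store", "pizza"]
doc_a = bag_of_words("KNIME workflow with a vector store", vocabulary)
doc_b = bag_of_words("vector store in a KNIME workflow", vocabulary)
doc_c = bag_of_words("pizza pizza pizza", vocabulary)

print(cosine_similarity(doc_a, doc_b))  # same vocabulary words: similarity 1.0
print(cosine_similarity(doc_a, doc_c))  # no shared vocabulary words: similarity 0.0
```

Note that word order is lost in this representation, which is one reason trained embeddings are usually preferred for semantic search.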

Using embeddings in text processing

Embeddings are a specific type of vector used to represent words in a vector space in a way that captures the semantics and relationships between them. These embeddings are generated using machine learning and NLP techniques. Unlike static vectors, embeddings are specifically trained so that similar words have similar numerical representations in the vector space. Nowadays, it's common for entire documents to be embedded instead of individual words.

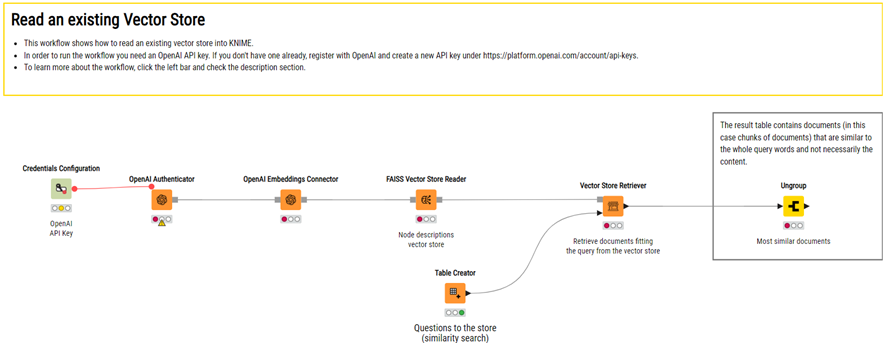

How to load an existing vector store

Downloading and running the sample workflow (pictured below) in your KNIME installation is straightforward. For the best performance, we advise using KNIME Analytics Platform 5.1 or higher.

For now, the FAISS Vector Store Reader node only supports local paths. To run the workflow, copy the NodeDescriptions folder to a local path and point the FAISS Vector Store Reader to that folder.

The vector store was created using a Python script, and the embedding model used was “text-embedding-ada-002” from OpenAI. To run the workflow, you need an OpenAI API key. If you don't have one yet, sign up for OpenAI and create a new API key at https://platform.openai.com/account/api-keys.

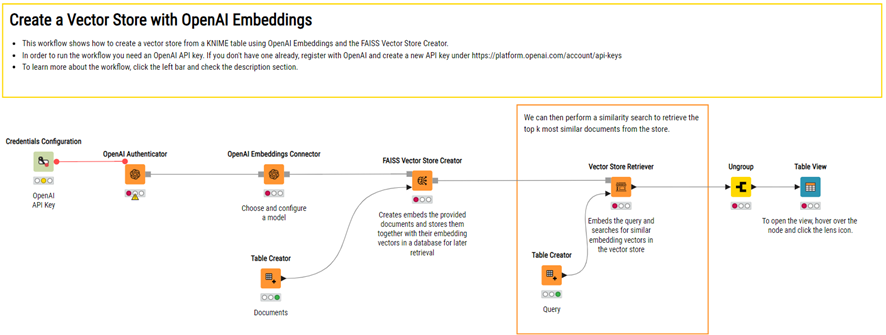

How to create a vector store

Download and run the workflow (pictured below) in the latest version of KNIME Analytics Platform for the most efficient performance.

The workflow also shows how a Vector Store Retriever can be used to query the vector store for similar documents.
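Conceptually, querying a vector store means ranking the stored document vectors by their similarity to a query vector and returning the closest documents. The toy sketch below shows that idea with an in-memory store. The class name `TinyVectorStore`, the document texts, and the hand-written three-dimensional vectors are all assumptions for illustration; in the workflow the vectors come from an embeddings model, and the KNIME nodes are not implemented this way.

```python
import math


class TinyVectorStore:
    """Toy in-memory vector store (illustrative only)."""

    def __init__(self):
        self._documents = []
        self._vectors = []

    def add(self, document, vector):
        self._documents.append(document)
        self._vectors.append(vector)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def retrieve(self, query_vector, top_k=2):
        """Return the top_k documents most similar to the query vector."""
        scored = [
            (self._cosine(query_vector, vector), document)
            for document, vector in zip(self._documents, self._vectors)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [document for _, document in scored[:top_k]]


store = TinyVectorStore()
# Made-up vectors standing in for real embeddings.
store.add("CSV Reader: reads CSV files", [0.9, 0.1, 0.0])
store.add("Excel Reader: reads spreadsheets", [0.8, 0.2, 0.1])
store.add("Row Filter: filters rows", [0.1, 0.9, 0.2])

print(store.retrieve([1.0, 0.0, 0.0], top_k=2))
```

A query vector pointing in the "reader" direction brings back the two reader descriptions and leaves the Row Filter out.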

As mentioned in the previous example, to run the workflow you need an OpenAI API key, which can be created at https://platform.openai.com/account/api-keys.

Comparing Chroma and FAISS

To manage the vectors, we need either the FAISS or the Chroma library. Let's make a brief comparison:

Chroma is a vector store and embedding database designed from the ground up to make it easy to build AI applications with embeddings. Its main feature is that it’s designed to handle modern AI workloads, making it a good choice for applications that rely heavily on embeddings.

FAISS, on the other hand, is a library developed by Facebook for efficient similarity search and clustering of dense vectors. The FAISS library is open source, allowing developers to examine, modify, and distribute the source code.

Practical examples of solutions with KNIME, NLP, and vectors

Product recommendation: If you are working on a recommendation system, you can use vectors to represent both users and products. The user and product vectors can be fed to a recommendation model to predict user preferences and offer personalized recommendations.
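As a rough sketch of the idea, the example below scores each product by the dot product between a user vector and the product vector, then ranks the products by that score. The dot product stands in for a real recommendation model, and the two-dimensional vectors (interest in "tech" and "cooking") and product names are invented for illustration.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def recommend(user_vector, product_vectors, top_k=2):
    """Rank products by dot-product score against the user vector."""
    ranked = sorted(
        product_vectors,
        key=lambda name: dot(user_vector, product_vectors[name]),
        reverse=True,
    )
    return ranked[:top_k]


# Made-up vectors: [interest in tech, interest in cooking].
user = [0.9, 0.1]
products = {
    "laptop": [1.0, 0.0],
    "cookbook": [0.0, 1.0],
    "smart oven": [0.6, 0.7],
}

print(recommend(user, products, top_k=2))
```

For this tech-leaning user, the laptop ranks first, the smart oven second, and the cookbook last.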

Sentiment analysis: In sentiment analysis, text vectors can be used to classify said text as positive, negative, or neutral. This is useful in evaluating customer reviews, social media comments, and product reviews.
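One simple way to classify text vectors is a nearest-centroid approach: average the vectors of the labeled examples for each class, then assign a new text to the class whose average is closest. The sketch below uses that technique as a stand-in for a real sentiment model. The two-dimensional vectors (counts of positive and negative words) and the training examples are invented for illustration.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def train(examples):
    """Compute one centroid per label from {label: [vectors]}."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def classify(vector, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(vector, centroids[label]))


# Made-up vectors: [positive-word count, negative-word count].
examples = {
    "positive": [[3, 0], [2, 1]],
    "negative": [[0, 3], [1, 2]],
    "neutral": [[1, 1], [0, 0]],
}
centroids = train(examples)

print(classify([2, 0], centroids))
print(classify([0, 2], centroids))
print(classify([1, 1], centroids))
```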

Automated text summarization: Text vectors can help identify the most relevant sentences or text fragments to generate summaries of long documents. This involves evaluating the importance of each sentence in the context of the document.
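A minimal sketch of this kind of extractive summarization: represent each sentence and the whole document as word-count vectors, score each sentence by its cosine similarity to the document vector, and keep the highest-scoring sentences in their original order. Word counts are a simplification used here in place of real embeddings, and the function names and sample text are my own.

```python
import math
import re
from collections import Counter


def text_vector(text):
    """Word-count vector of a text, stored as a Counter keyed by word."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two Counter-based vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def summarize(text, max_sentences=1):
    """Keep the sentences most similar to the document as a whole."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    document_vector = text_vector(text)
    ranked = sorted(
        range(len(sentences)),
        key=lambda i: cosine(text_vector(sentences[i]), document_vector),
        reverse=True,
    )
    keep = sorted(ranked[:max_sentences])  # restore original sentence order
    return " ".join(sentences[i] for i in keep)


text = (
    "KNIME builds vector stores from documents. "
    "Vector stores let KNIME retrieve similar documents. "
    "The weather was nice."
)
print(summarize(text, max_sentences=1))
```

The off-topic sentence about the weather shares no words with the rest of the text, so it scores lowest and is the first to be dropped.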

Text processing has never been so accessible

The combination of LLMs, vectors, and other AI models in KNIME is transforming the way we approach text processing and semantic understanding. With this technology, we are opening new doors in all types of applications.

Text processing has never been so accessible and full of possibilities. If you want to explore more about how KNIME and AI can boost your work in text processing and NLP, I encourage you to continue exploring these tools and technologies.

Recently, KNIME posted a webinar where Adrian Nembach, Seray Arslan and Carsten Haubold discussed this topic. I highly recommend that you not only watch it once, but really study it. It is a gem for those of us who are excited by this topic.

Watch the webinar How to build AI-powered data apps using KNIME.

If you are interested in text processing and AI, feel free to follow me for more updates and discussions.